PowerPoint presentation given to Imperial College London

Mars imaged with ZWO ASI224MC

Planetary Imaging with a One Shot Colour Camera

A revolution in planetary imaging has been quietly happening over the past few years: one shot colour (OSC) planetary cameras are now able to compete with the results produced by mono cameras and multiple filters. The time saving over the mono method I’ve described in previous blogs is enormous. Instead of shooting multiple sequences through three or four different filters, only one sequence is required, reducing imaging time and the chance of those nasty clouds ruining your hard-won dataset!

Capturing Your Images

All my previous comments regarding the capture of your images apply here, you can revisit them in my posts on high resolution imaging of the Moon or my guide to planetary imaging. To summarise:

focus is vital, no amount of skill will compensate for a blurry dataset, an electronic focuser is a fantastic tool for achieving good focus as you don’t need to touch the telescope eliminating vibrations. If you can I would really recommend getting one.

wait until your target is at its highest point in the sky, this will ensure you are shooting through the minimum amount of atmosphere. This is why it is best to image outer planets around opposition as they will be at their closest to Earth and reach a good altitude (if you are lucky)

if you are imaging at long focal lengths and your image is dancing on the screen resembling the view through boiling water you may want to pack up and try another night. Bad seeing is something you can do nothing about. Imaging just after dusk or just before dawn often offers the most stable conditions.

transparency or the ‘murkiness’ of the atmosphere will soften your images, best results will be on nights of good transparency.
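To put a rough number on the point about altitude, here is a minimal sketch of how much atmosphere you are looking through at different heights above the horizon. It uses the simple plane-parallel approximation (airmass = 1 / sin(altitude)), which is my assumption for illustration and becomes inaccurate close to the horizon.

```python
import math

def relative_airmass(altitude_deg: float) -> float:
    """Plane-parallel approximation: airmass = 1 / sin(altitude).

    1.0 means looking straight up; the approximation breaks down
    within a few degrees of the horizon.
    """
    if not 0 < altitude_deg <= 90:
        raise ValueError("altitude must be between 0 and 90 degrees")
    return 1.0 / math.sin(math.radians(altitude_deg))

for alt in (20, 30, 45, 60, 90):
    print(f"altitude {alt:2d} deg -> about {relative_airmass(alt):.2f} airmasses")
```

A target at 30 degrees is seen through roughly twice as much air as one overhead, which is why waiting for your target to culminate pays off.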

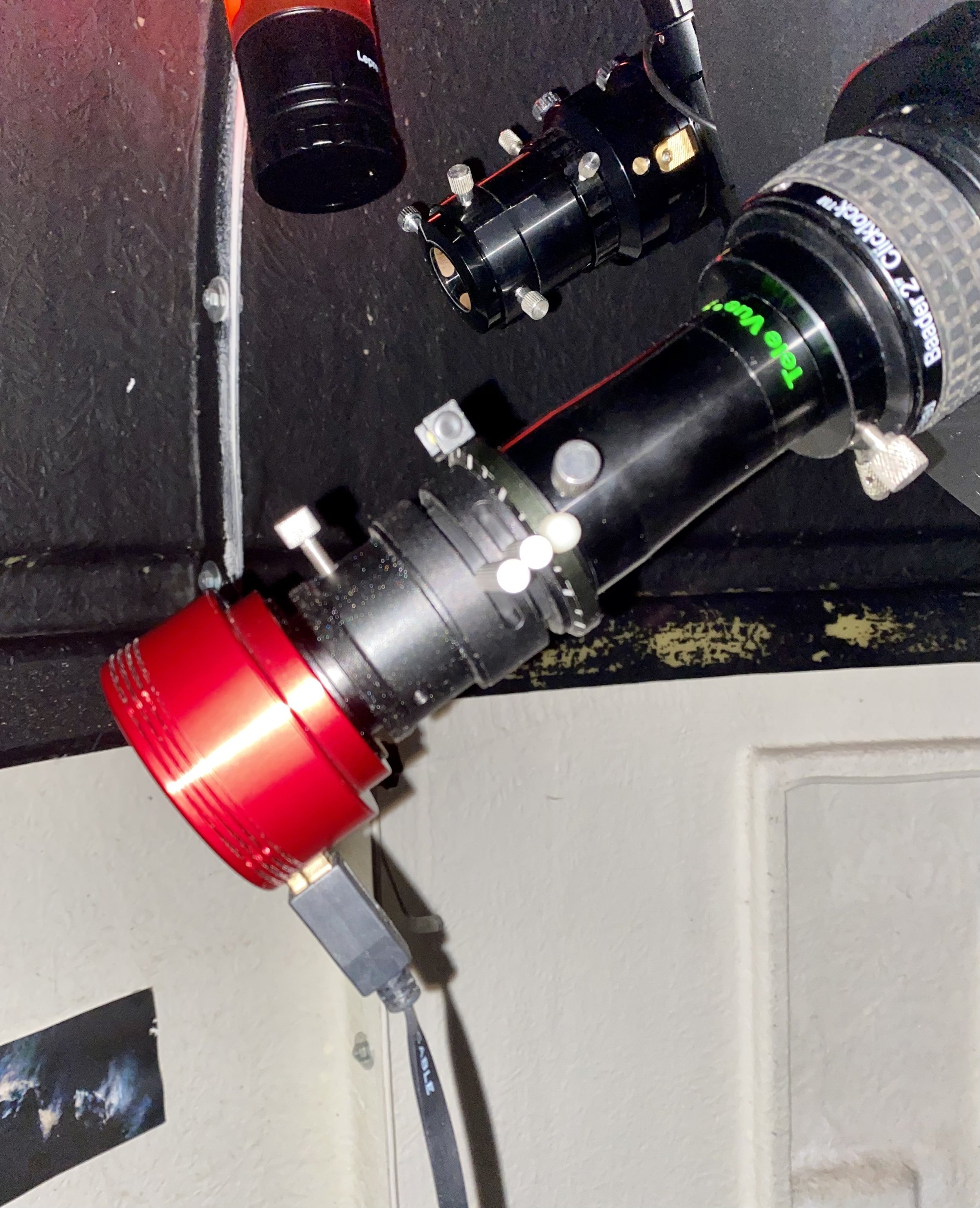

In addition to the points above when using a colour camera an ADC is pretty much vital for best results. In the image below the ADC is just in front of the camera in the imaging train.

Imaging Train (L-R) ZWO ASI224MC camera, ZWO ADC, Televue 2.5x Powermate

An Atmospheric Dispersion Corrector (ADC) helps counteract the refraction of light as it passes through our atmosphere, the amount of refraction depending on the altitude of the object you are imaging and the wavelength of light. A correctly adjusted ADC placed between the camera and image amplifier such as a Barlow lens or Powermate will greatly reduce the spectral spread caused by the atmosphere improving the resolution of your images. An ADC applies the opposite amount of dispersion to that caused by the atmosphere, re-converging the light from the different wavelengths at the focal point of your scope, doing this via a double prism.
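To give a feel for the size of the effect an ADC is correcting, here is a rough sketch that estimates the spread between blue and red light at a given altitude. The assumptions are mine: standard dry air at sea level, Edlén's 1953 formula for the refractive index of air, a simple tan(zenith distance) refraction model, and 450 nm and 650 nm as stand-ins for "blue" and "red". Treat the numbers as indicative only.

```python
import math

def air_refractivity(wavelength_um: float) -> float:
    """(n - 1) for standard dry air, using Edlén's 1953 dispersion formula."""
    sigma2 = (1.0 / wavelength_um) ** 2  # squared wavenumber, 1/um^2
    return 1e-6 * (64.328 + 29498.1 / (146.0 - sigma2) + 255.4 / (41.0 - sigma2))

def dispersion_arcsec(altitude_deg: float,
                      blue_um: float = 0.45,
                      red_um: float = 0.65) -> float:
    """Approximate blue-to-red spread in arcseconds at a given altitude."""
    zenith = math.radians(90.0 - altitude_deg)
    delta_n = air_refractivity(blue_um) - air_refractivity(red_um)
    # Refraction is roughly (n - 1) * tan(z) radians; convert to arcseconds.
    return math.degrees(delta_n * math.tan(zenith)) * 3600.0

for alt in (20, 30, 45, 60):
    print(f"altitude {alt} deg -> roughly {dispersion_arcsec(alt):.1f} arcsec of spread")
```

Under these assumptions the spread at 30 degrees altitude comes out at more than an arcsecond, which is large compared with the fine detail you are trying to resolve on a planet, and it shrinks quickly as the target climbs.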

Using ADCs used to instil fear in the hearts of many astrophotographers as ensuring the ADC was level with the horizon and the prisms were correctly aligned could be a challenging task, however software has come to the rescue. I use SharpCap as it is the application I am most familiar with but FireCapture also has a feature to assist in aligning your ADC so use whichever you are most happy with. The screenshot below shows the SharpCap ADC alignment feature where all you have to do is move the prism arms relative to each other to a point where you get the minimum values against the red and blue lines (0.4 in this case). The length of the lines should also be as small as possible. Once you are happy you are good to go!

ADC alignment feature in SharpCap

As you are now ready to start imaging, this might be a good point to briefly discuss cameras. I have a ZWO ASI224MC camera which produces results I am really happy with, the ZWO ASI482MC is also gaining a lot of popularity and I have seen some superb results from it. Recently Damian Peach reviewed the Player One Saturn M-SQR and was very positive about it, with, as you would expect, fantastic results.

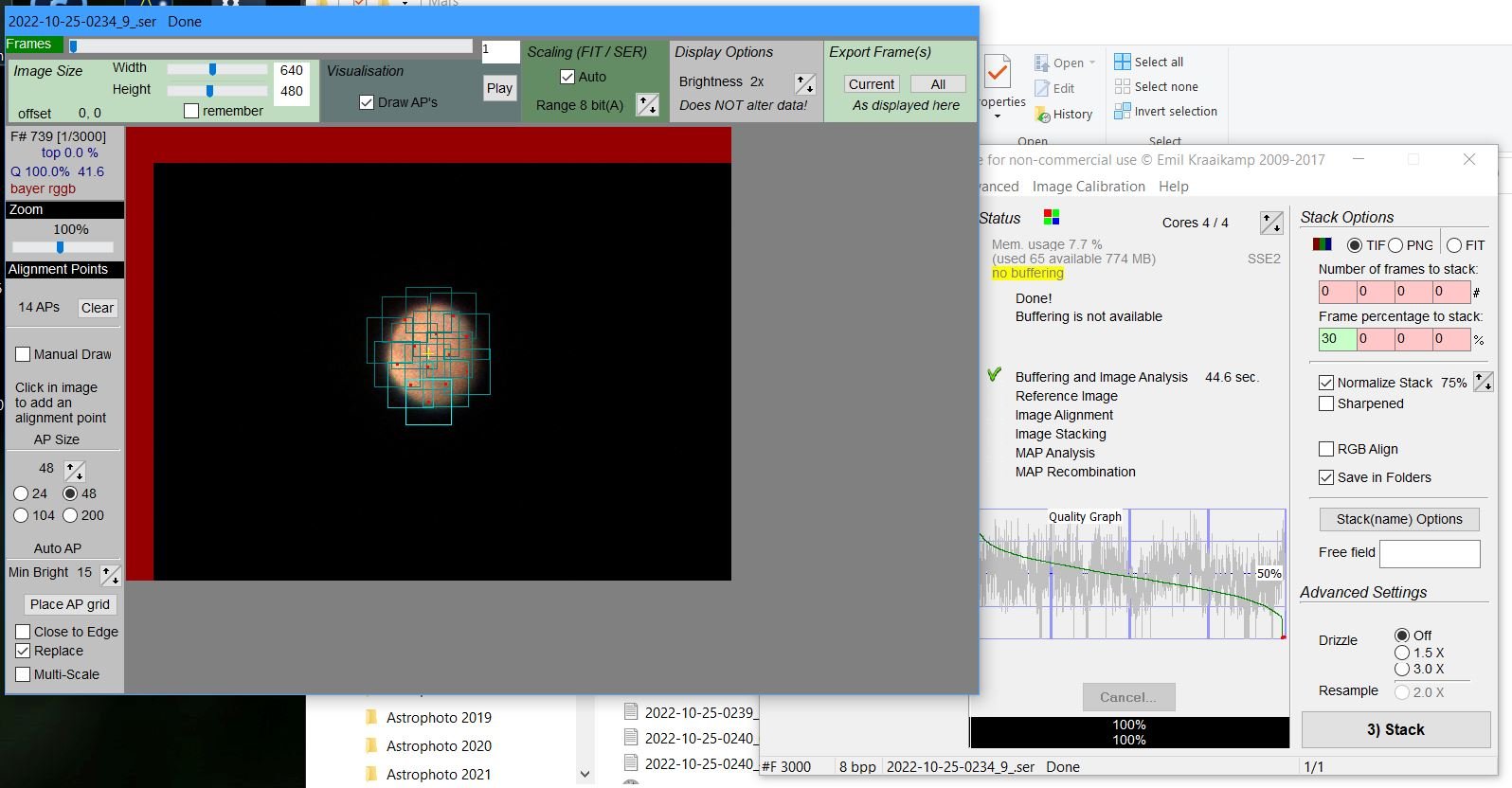

The image below shows the SharpCap imaging screen, as you can see this time I am imaging Mars and conditions were pretty decent.

Mars imaging with SharpCap

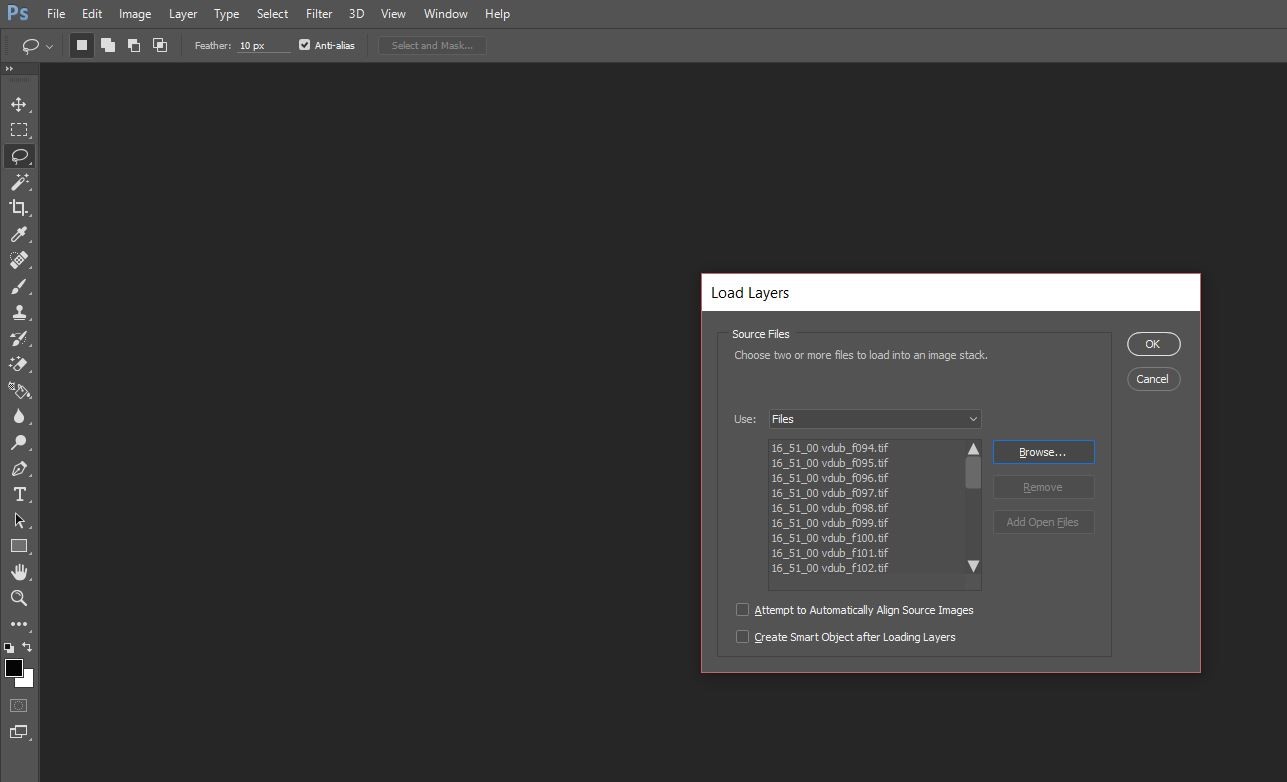

As imaging is a much quicker process with an OSC camera I tend to take a longer sequence of shots. In the example below I’ve shot ten video files for stacking and sharpening.

Processing Your Images

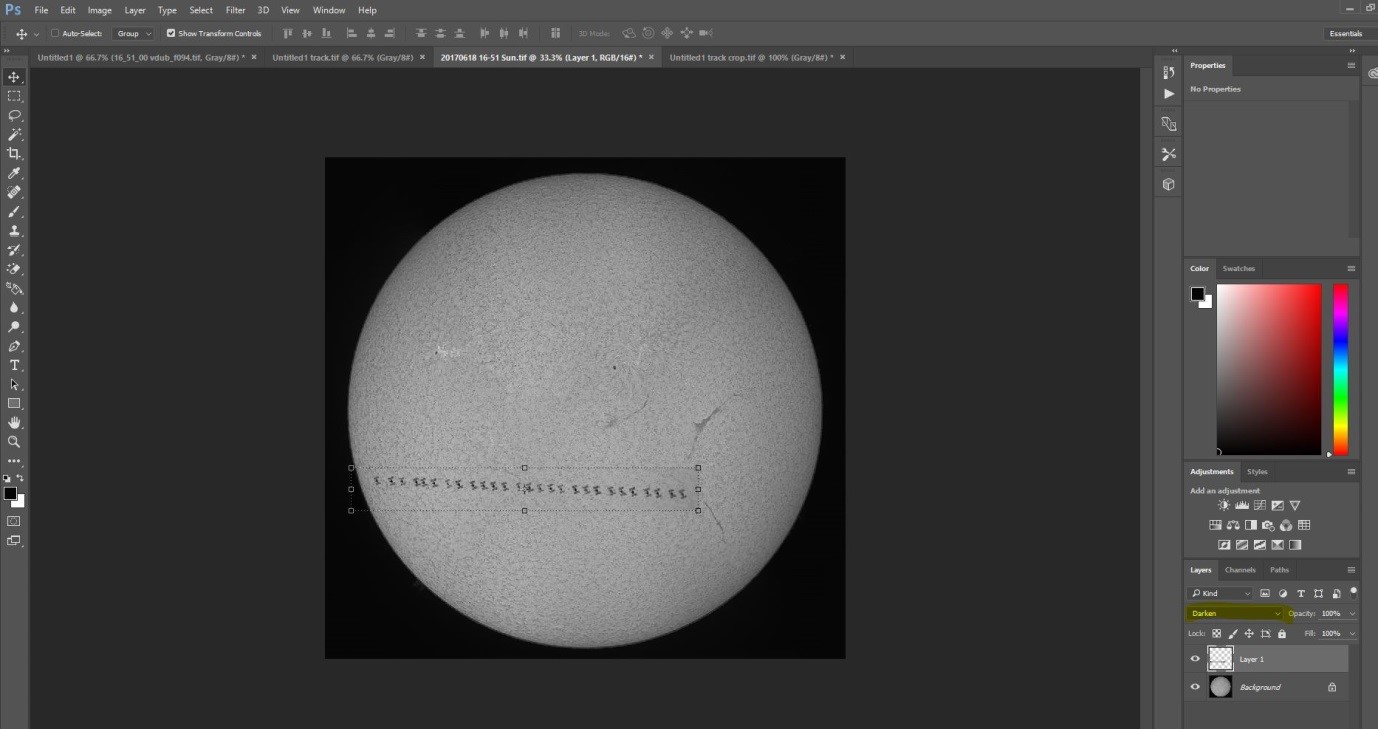

Stack each video in AutoStakkert!3 as described in previous posts, once you have stacked your first video you can drag the remaining files into the application and just hit stack - go off, have a drink and come back to a set of stacked files which you can then sharpen in Registax.

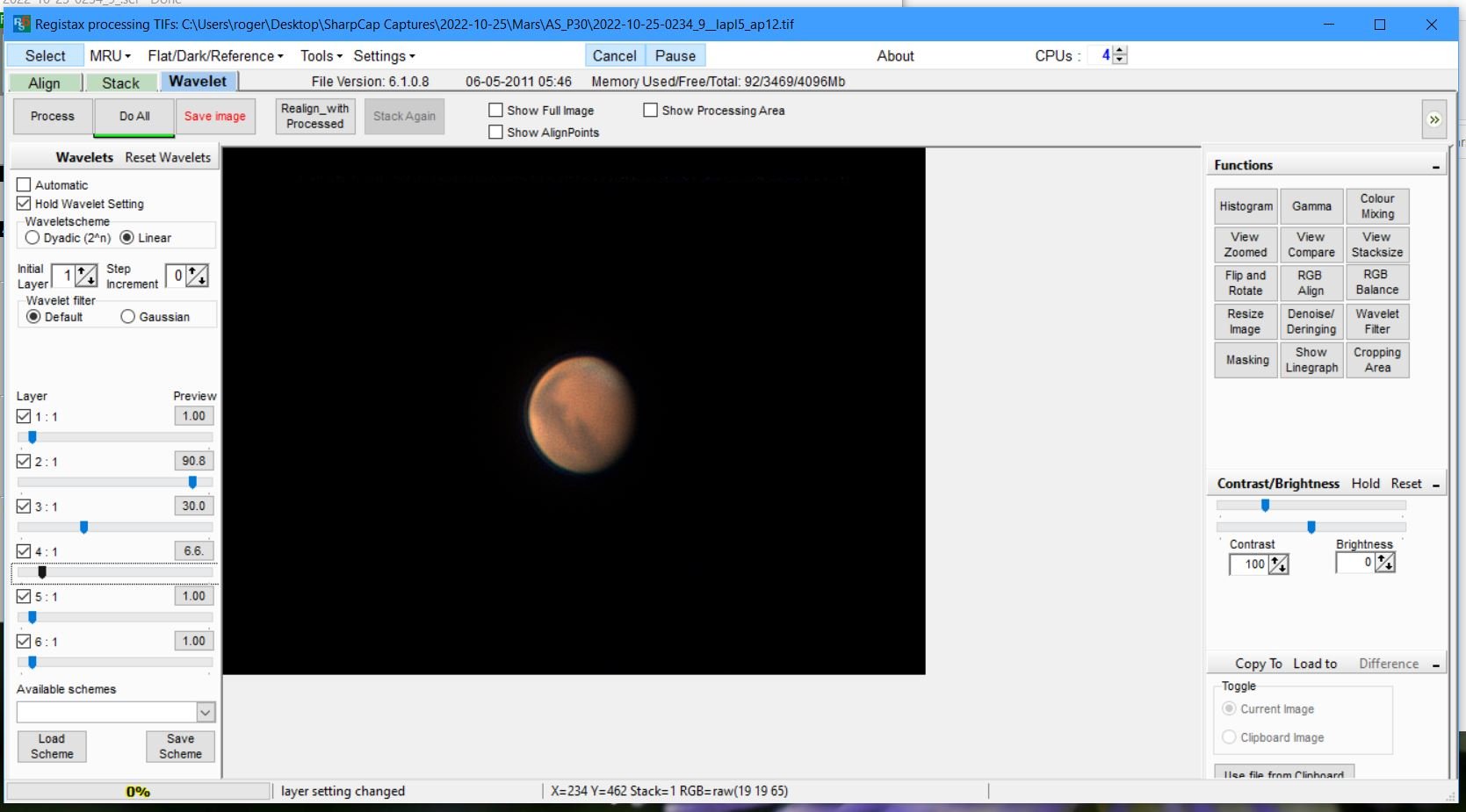

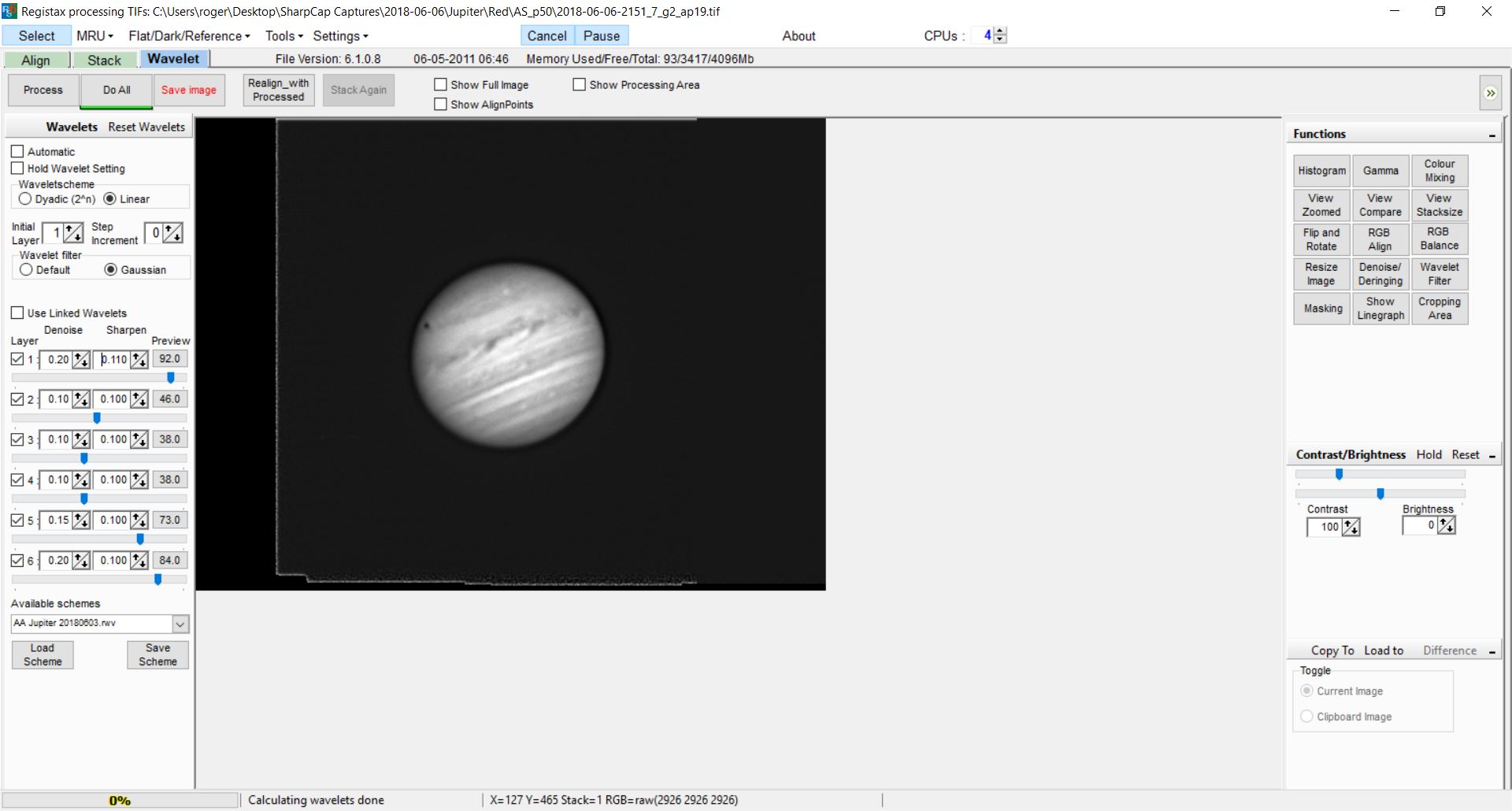

Each stack needs to be sharpened as shown below, the amount of sharpening depending on the individual file, generally if you have a set of stacks shot within a brief time period they will all require a very similar amount of sharpening so you can select the Hold Wavelet setting which will apply the same sharpening to each file you open, you can then adjust accordingly.

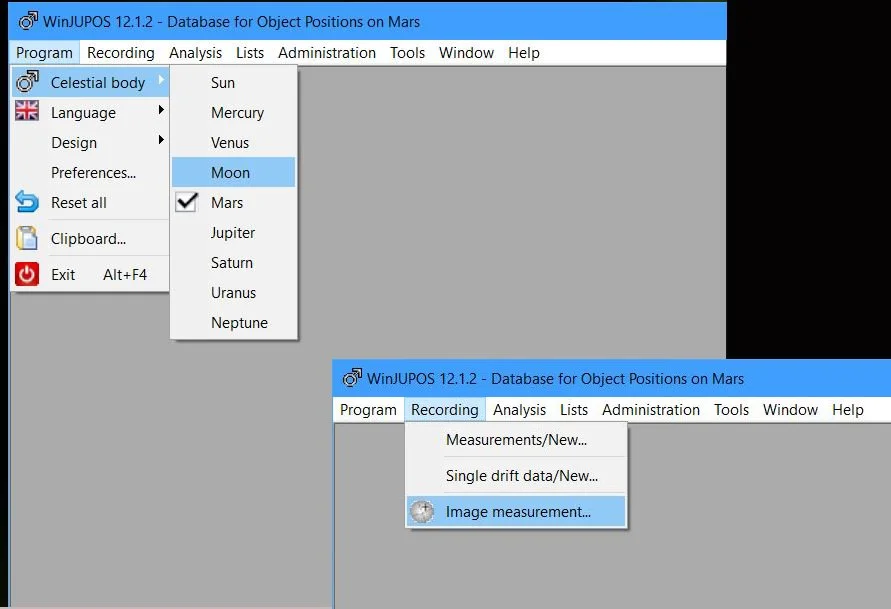

The next step is to de-rotate your images in WinJupos. A big time saver is to ensure you are generating your files with WinJupos format file names in SharpCap or FireCapture. When you sharpen in Registax save a copy of the sharpened file with the stacking details removed e.g. 2022-10-25-0234_9__lapl5_ap12 would be saved as 2022-10-25-0234_9 - this will enable WinJupos to set the correct timestamp details for the image without you having to set them yourself. Make sure you select the correct celestial body and then choose Image Measurement as shown below.

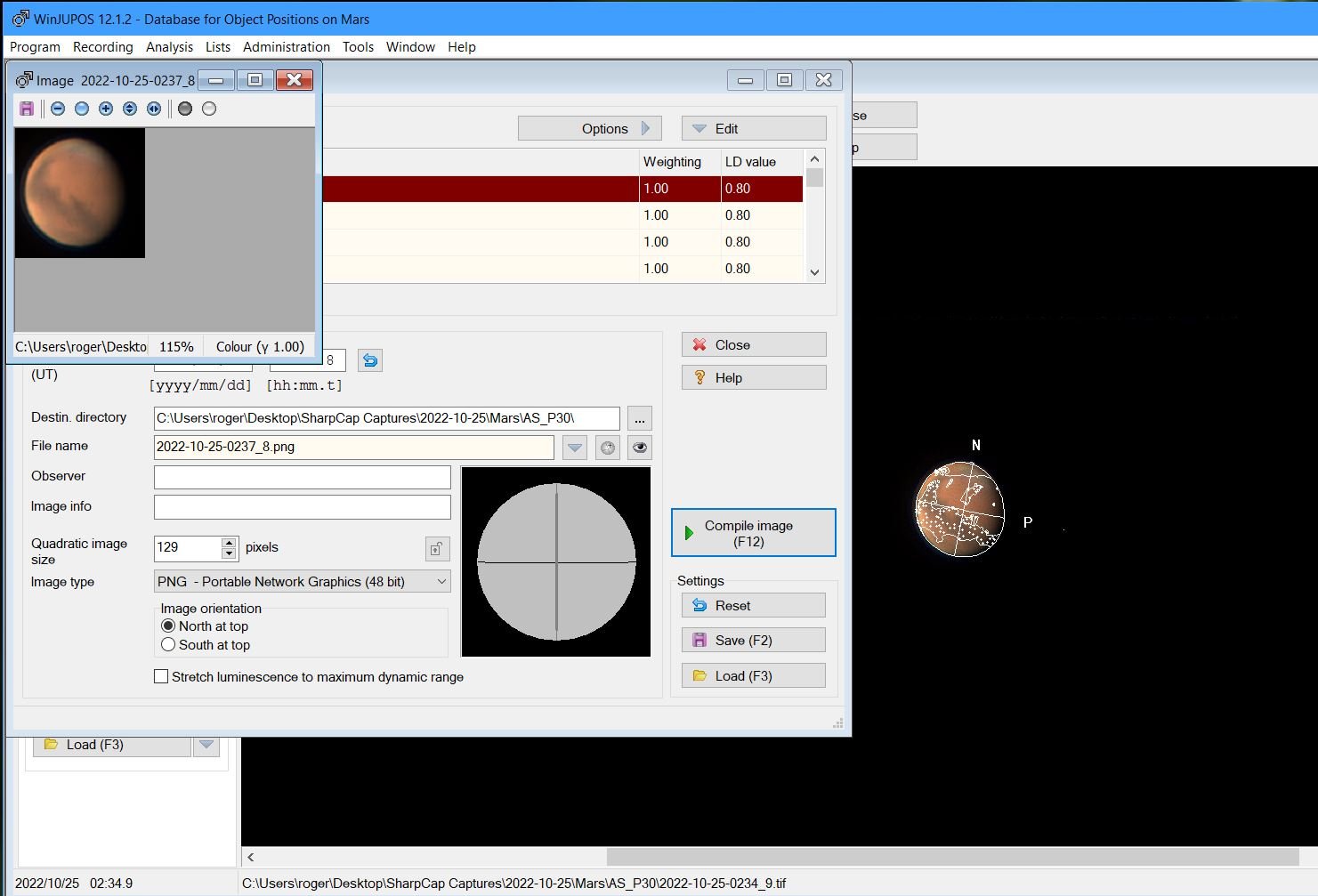

Once you’ve opened the first of your processed images you will see that the details from the file name will be populated in the date and UT fields. You will have to set your longitude and latitude, which you can easily get from an app such as the compass on your iPhone. Next select the Adj. tab to ensure that the frame is correctly placed on the planet. In this mode use the arrow keys to move the frame over the planet, use Page Up and Page Down to alter its size and N & P to rotate the frame clockwise and anti-clockwise. You can choose the additional graphic as shown below which helps with placement.

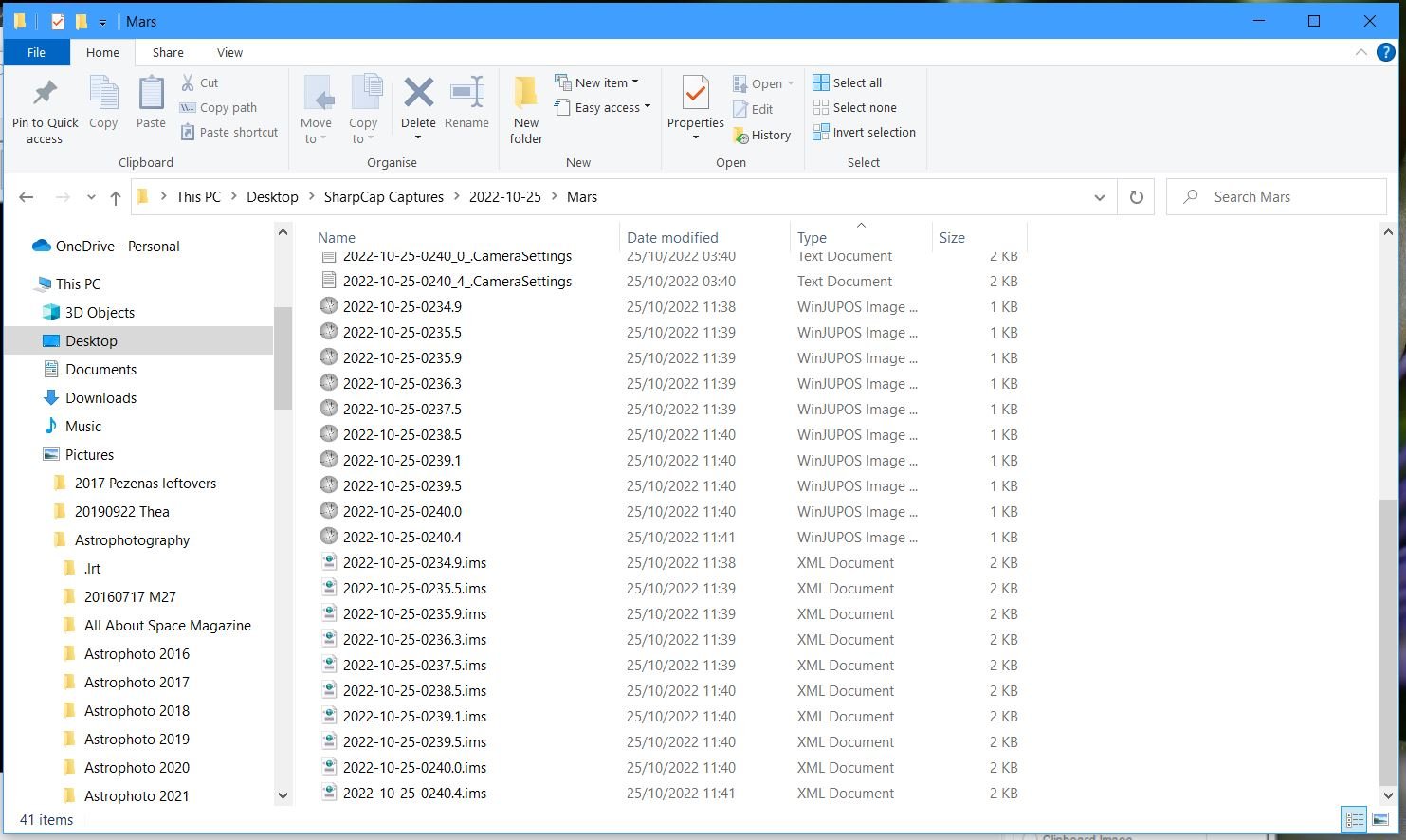

Once happy save the file and repeat this for each image, adjusting as required - the resulting files shown below.

Next you need to de-rotate your images into a single stack. Add the image files and change the LD value, I use 0.75 for Jupiter and 0.80 for Mars, but you can experiment, then hit Compile Image.

The compiled image when generated appears in a pop up as shown below.

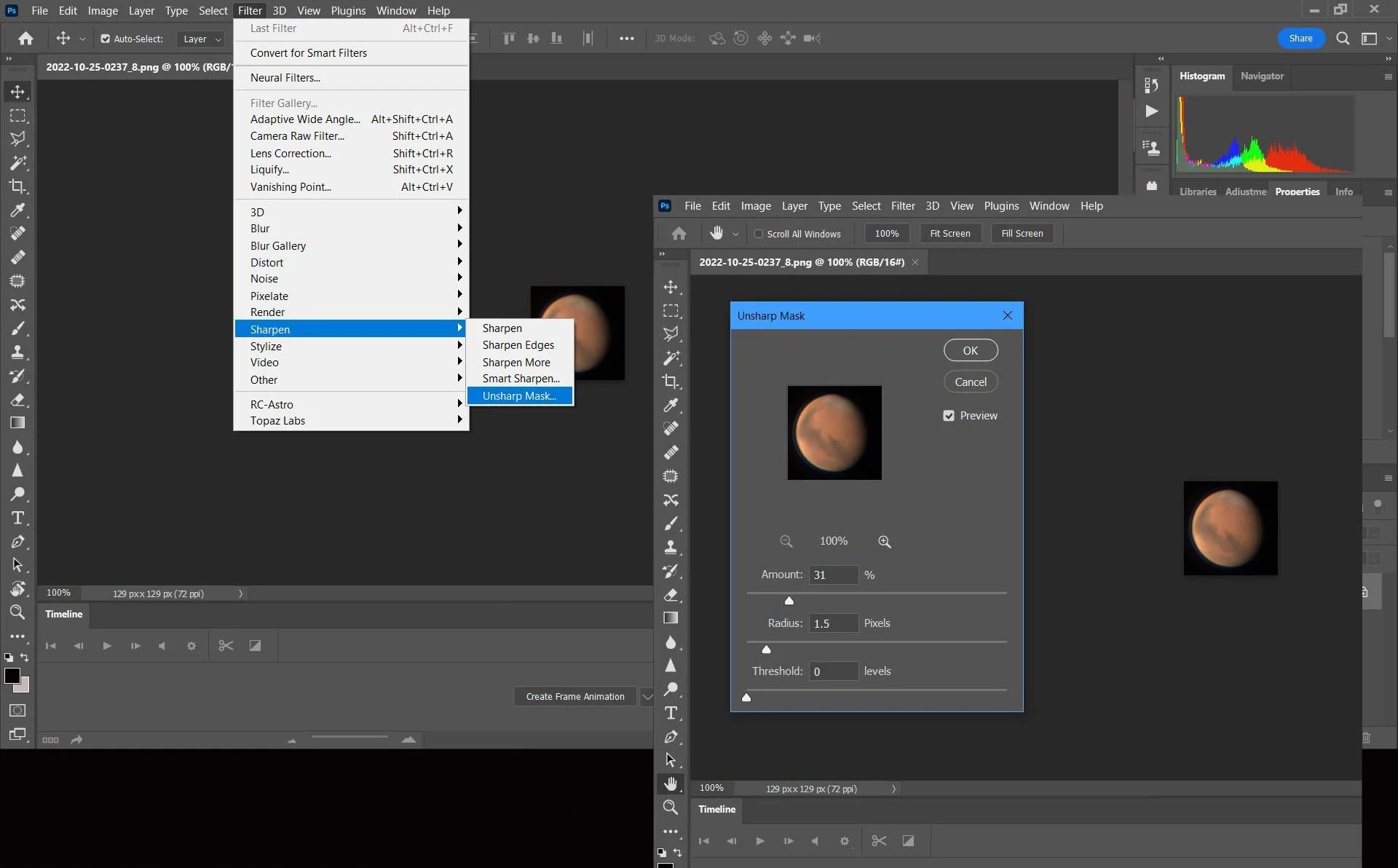

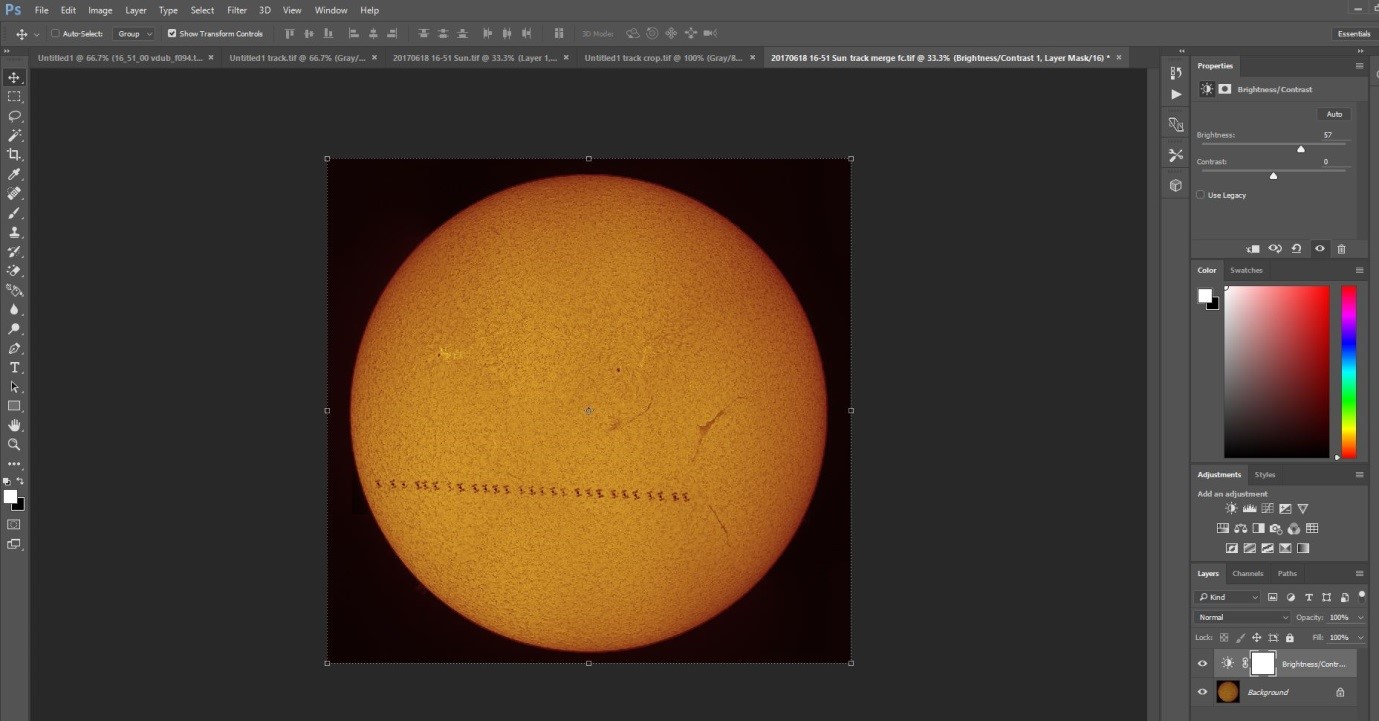

You can do a little more sharpening of the result in Registax, only a tiny bit as over-sharpening will spoil your image. You can now do your final processing in PhotoShop. In the image below I’m doing some additional sharpening.

You can also perform a despeckle and have a play with contrast to bring out the albedo features on objects such as Mars.

As a final step when a target is relatively small such as Mars a few months prior to opposition I like to increase the space around the planet purely for aesthetic reasons as shown here. Extend the area using crop, match the colour to that around the planet and then fill in the white space using the brush tool.

Once you’ve completed the steps above you should get something like the result below, then sit back and enjoy all that extra time you’ve gained!

Creating An HDR Image Of The Moon

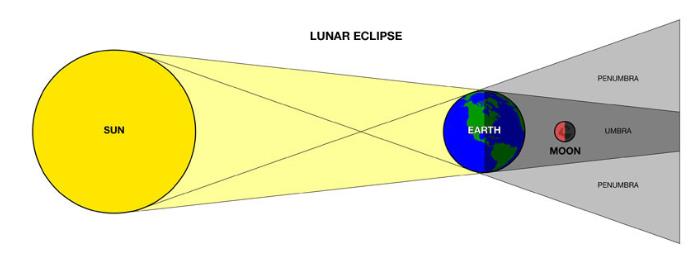

If you gaze up at the Moon a few days before or after new you will often see the beautiful glow of Earthshine, caused by sunlight being reflected from the Earth onto the unlit portion of the lunar surface.

Our eyes can discern this relatively easily as they can cope with the huge differential in brightness (dynamic range) across the lunar surface, cameras however have a much harder time dealing with this. If you expose correctly for the lit portion of the Moon you will have no chance of picking up the faint glow of Earthshine, if you expose for Earthshine then the lit portion of the Moon is completely overexposed. Here I’ll take you through how to create beautiful HDR images of the Moon in Photoshop using simple techniques so that hopefully you will be able to produce images like (or better than) the ones in this post.

First Shoot the Moon!

The first thing to do is to take your images of the Moon, you’ll need to take two exposures one with the crescent correctly exposed like the image on the left, and a second exposed for Earthshine (image on the right). You can see that the earthshine image has lost all crescent detail, this is what we are going to retrieve with this technique. The technical details are below the image, I’ve kept it simple here just using a camera and telephoto lens mounted on a standard tripod. You could also shoot through a telescope on a tracking mount - you just need to be sure you can fit the whole lunar disk in the field of view.

Left image 1/80 sec @ ISO1600, right image 1.3sec @ ISO1600. Both images shot using a Canon EOS 6D with Canon EF 70-300mm f/4-5.6L USM IS Telephoto lens at 300mm with 2x Extender
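As a quick illustration of how far apart those two exposures are, this small sketch converts the shutter speeds quoted above into photographic stops. Both frames were shot at the same ISO, and I am assuming the aperture was unchanged, so only the shutter time matters here.

```python
import math

crescent_s = 1 / 80    # exposure for the lit crescent, in seconds
earthshine_s = 1.3     # exposure for Earthshine, in seconds

ratio = earthshine_s / crescent_s   # how many times more light is collected
stops = math.log2(ratio)            # each stop is a doubling of exposure

print(f"Earthshine frame collects about {ratio:.0f}x more light")
print(f"That is roughly {stops:.1f} stops")
```

A gap of well over six stops between the two frames shows why a single exposure cannot hold both the crescent and the Earthshine.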

Processing Your Images in PhotoShop

Process your individual images in Photoshop (I’m using PhotoShop CC) as normal, flatten the images and save, then open your crescent image and paste the earthshine image on top of the crescent as a layer (shown here as Layer 1).

Select the Earthshine layer and change its opacity so that you can see the crescent below it. You can then use the Move tool to place it correctly over the crescent.

Change the opacity of the Earthshine layer back to 100% and click on the layer mask icon - shown as the white rectangle with dark circle in the image below.

With the layer mask selected (make sure you’ve clicked on it), select the Gradient Tool (shown below)

With the gradient tool select the edge of the lunar disk in the Earthshine image and drag your cursor towards the lit portion as shown below.

When you let go of the cursor you’ll see something like the image below, you can undo and repeat the process a few times until you get the effect you want. Don’t worry about the obvious sky gradient - we’ll deal with that.
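If it helps to picture what the layer mask and gradient are doing, here is a minimal one-dimensional sketch. It assumes the usual layer mask behaviour, where a mask value of 1 shows the top (Earthshine) layer and 0 shows the crescent layer beneath; the pixel values and ramp positions are made up for illustration.

```python
def blend_with_mask(top, bottom, mask):
    """Per-pixel layer-mask blend: mask 1.0 shows the top layer, 0.0 the bottom."""
    return [m * t + (1.0 - m) * b for t, b, m in zip(top, bottom, mask)]

def linear_ramp(length, start, end):
    """Mask that is 1.0 up to `start`, 0.0 from `end`, and linear in between."""
    mask = []
    for i in range(length):
        if i <= start:
            mask.append(1.0)
        elif i >= end:
            mask.append(0.0)
        else:
            mask.append(1.0 - (i - start) / (end - start))
    return mask

# Hypothetical brightness values along a line from the dark limb to the lit limb.
earthshine = [0.30, 0.30, 0.32, 0.90, 1.00, 1.00]  # lit side blown out
crescent = [0.00, 0.00, 0.01, 0.20, 0.60, 0.70]    # Earthshine invisible

mask = linear_ramp(len(earthshine), start=1, end=4)
composite = blend_with_mask(earthshine, crescent, mask)
print([round(v, 2) for v in composite])
```

Dragging the gradient over a longer distance corresponds to a wider ramp, which gives a softer transition between the two exposures.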

In the image below I’ve used the Magic Wand tool with a setting of 3 by 3 average and tolerance of 5 to select the lighter sky background, you’ll have to adjust these to your particular image. I’ve also feathered the selection by ~50 pixels.

Adjust the levels of the selected area so that it better matches the sky background of the lit crescent image.

A little to and fro will result in a nice even sky background with the crescent Moon and Earthshine both shown

The image below is the result, you can clean it up a little more, reduce noise and sharpen etc. but I wanted to show the result simply of applying the technique described above.

You can adapt the approach above for larger crescents like the one at the top of this post. For that image I created the composite image as described but then pasted it on top of the Earthshine image, reducing its opacity until details were still visible on the lit portion but the overall effect was of a bright Moon with the light sky background of the Earthshine shot. I then used the eraser tool, with the pasted image selected, to carefully work around the lunar limb to blend the two images - a bit more work but I think it gives a great result. Good luck and make sure you share your results with me on Twitter or Instagram (@thelondonastro)!

Imaging the Planet Mars

Mars - 12th September 2020

With Mars reaching opposition on the 13th October this year (2020) now is the time to get imaging ‘The Red Planet’ while it is at its biggest and brightest for many years to come.

Mars has been a great target since early August and this will continue until December at least, in fact November and December offer the best times to view the planet if you don’t like late nights or early mornings, especially if you are not an obsessive like me who doesn’t mind getting out of bed at 3 am to view and image the planet in the months before opposition!

This post will hopefully give you a few tips on imaging this beautiful planet from capture right through to final processing, hopefully you’ll find it useful.

The Imaging Train

The information in this post relates to my setup which is a Celestron Edge HD11 scope, Televue 2.5x Powermate & ASI174MM camera. Filters mentioned are Baader 685nm IR and ZWO R,G,B. Images are captured using filters and a mono camera, colour images being created in PhotoShop CC. Software used is AutoStakkert 3, Registax 6, WinJupos (all free for non commercial use) and PhotoShop Creative Cloud.

The picture above shows my imaging train for planetary work. From top to bottom it shows the Televue 2.5x Powermate, ZWO ADC, flip mirror & eyepiece, filter wheel and ZWO ASI174MM camera. I tend not to use the ADC for Mars when it is riding high in the sky, as it is at the moment, so would typically remove it; when I do use it I remove the flip mirror. The top of the picture shows the electronic focuser. I’ll go into a little more detail on the purpose of each of these below:

Focus - It goes without saying that if the data you are capturing is not properly focused the end result, no matter how much post processing you do, will be disappointing. When imaging at long focal lengths it can be a challenge to achieve that optimal focus especially if you have to touch the telescope to turn the focusing knob. An electronic focuser really helps as it enables you to focus without touching the telescope and makes finding fine focus relatively easy. I can’t recommend getting one enough if you are serious about high resolution imaging. For Mars focus on the bright limb of the planet and achieve fine focus on the albedo features. Remember you will need to re-focus after each filter change, even if you believe your filters to be parfocal.

Image Size - When imaging any planet you want it to occupy as much of your chip as possible so image amplification is essential. I use a 2.5x Televue Powermate which increases the focal length of my setup to 7000mm providing a decent image scale when paired with my Celestron Edge HD11 scope.

Filters - As I’m imaging using a mono planetary camera I have to place filters in front of it to ultimately achieve a colour image. The filters I use for Mars are 685nm IR, Red and Blue (more on this later!)

Camera - For best results a high frame rate planetary camera is a must, I would recommend a mono camera for best resolution but some colour cameras do produce good results and are easier to use but perhaps at the sacrifice of versatility & resolution.
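To make the image size point concrete, here is a rough sketch of the image scale arithmetic. The 2.5x amplification and 7000mm focal length come from the text above; the native focal length of 2800mm, the 5.86 micron pixel size for the ASI174MM and the roughly 22.6 arcsecond diameter for Mars near the 2020 opposition are my assumptions, so check them against your own kit and date.

```python
def image_scale_arcsec_per_px(pixel_um: float, focal_mm: float) -> float:
    """Standard small-angle formula: 206.265 * pixel size (um) / focal length (mm)."""
    return 206.265 * pixel_um / focal_mm

native_focal_mm = 2800.0   # assumption: native focal length of the scope
amplification = 2.5        # Powermate factor
focal_mm = native_focal_mm * amplification   # 7000 mm

pixel_um = 5.86            # assumption: ASI174MM pixel size
mars_arcsec = 22.6         # assumption: apparent diameter near opposition

scale = image_scale_arcsec_per_px(pixel_um, focal_mm)
mars_px = mars_arcsec / scale

print(f"Effective focal length: {focal_mm:.0f} mm")
print(f"Image scale: {scale:.3f} arcsec per pixel")
print(f"Mars spans roughly {mars_px:.0f} pixels")
```

Swapping in a camera with smaller pixels gives a finer image scale at the same focal length, which is one reason setups differ so much.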

Capturing Your Data

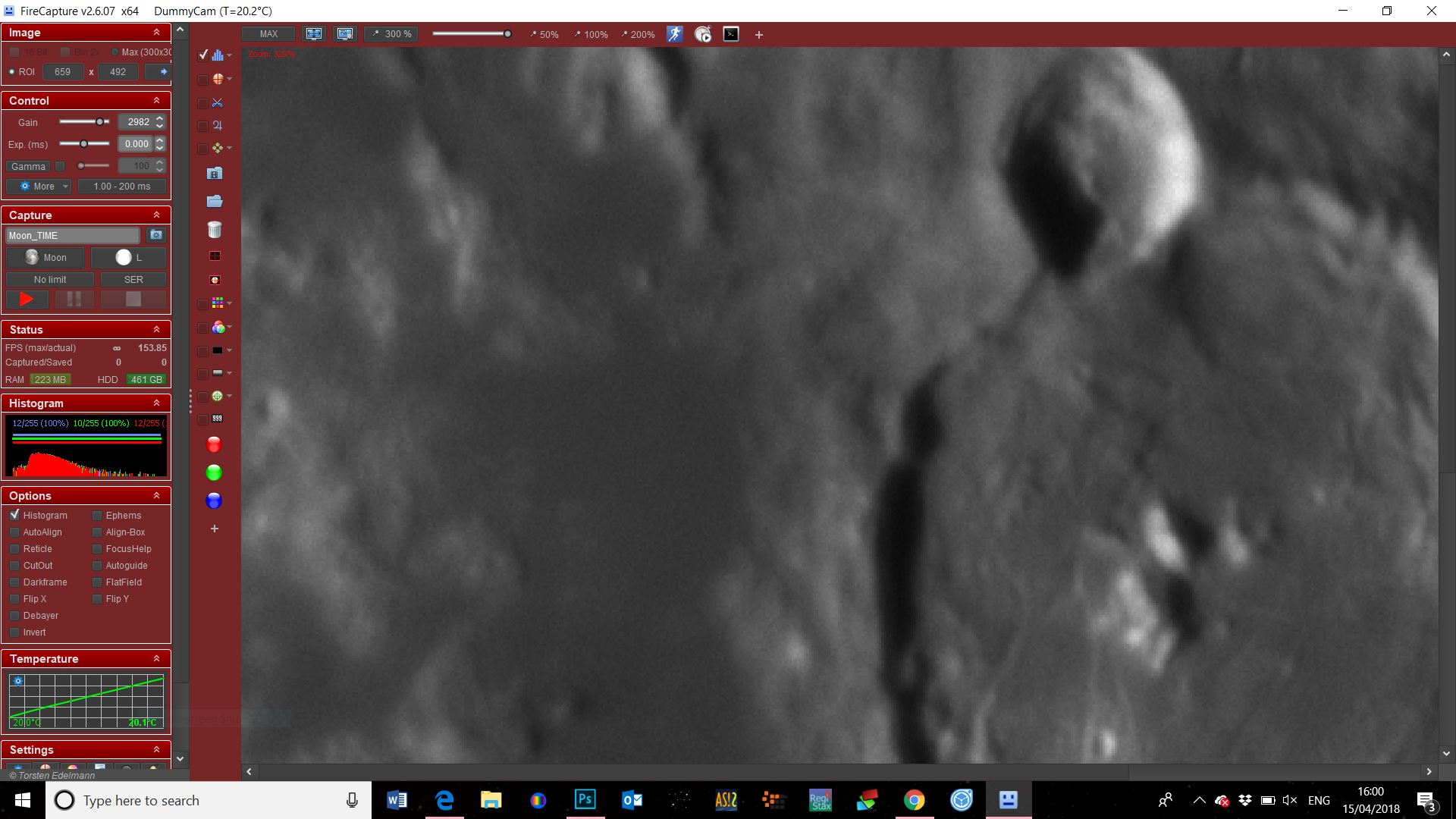

For image acquisition I use SharpCap Pro but you can also use FireCapture which is many people’s preferred capture software, either of these does a good job. I started off my planetary imaging journey using SharpCap and feel most comfortable with it so haven’t felt the need to change.

How much data should I capture? At the moment Mars is an absolute joy to image for us northern hemisphere astrophotographers, it is at a good altitude and bright, something we have been starved of over the past few years with both Jupiter and Saturn skimming our horizons! A benefit of this is that you can capture lots of data in a very short period of time. I aim to image five sets of 4000 frames through IR (optional), Red and Blue filters, you can also image through a green filter but I like to create a synthetic green channel (more in the final processing section). Once done you will have generated 20,000 frames through each filter, 60,000 in total or 80,000 if you are also shooting a green channel! Sounds a lot but you can capture all this within 10 minutes.

What settings should I use? Everyone’s setup is different so settings will differ as well, however I generally aim for:

Histogram at ~50% (25% for blue channel)

Gain at ~50%

Frame rate around 150 fps

Obviously each of these influences the other but as we are capturing so much data you can use a reasonably high gain setting to get yourself within the other parameters I’ve stated.
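The frame counts and timings above are easy to check with a few lines of arithmetic. This sketch uses the figures from the text (five sets of 4000 frames per filter at around 150 fps) and ignores the time taken to change filters and re-focus.

```python
sets_per_filter = 5
frames_per_set = 4000
fps = 150
filters = ["IR", "Red", "Blue"]   # add "Green" if you are shooting it

frames_per_filter = sets_per_filter * frames_per_set
total_frames = frames_per_filter * len(filters)
capture_seconds = total_frames / fps

print(f"Frames per filter: {frames_per_filter}")                      # 20000
print(f"Total frames: {total_frames}")                                # 60000
print(f"Pure capture time: about {capture_seconds / 60:.1f} minutes")
```

At these settings the capture itself takes under seven minutes, which leaves a little headroom for filter changes within the ten minutes mentioned above.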

Capturing Mars under reasonable seeing using SharpCap - 685nm IR Filter

Below you can see the .ser files produced by the imaging run shown above. SharpCap creates a directory on your desktop called ‘SharpCap Captures’ and creates sub-directories dependent on the date on which you are imaging. In addition if you provide a name for the target you are shooting it will also create a sub-directory with that name, in this case Mars. All this is configurable in the application as well as the file naming convention you wish to apply to your data files, make sure you choose WinJupos format as this will save you some time later.

Below you can see five .ser files have been created for the IR data, I then changed filters, re-focused and generated five for the red data and the same again for the blue. As the whole imaging run only took seven minutes I captured each set as a batch to minimise filter change and re-focus time.

Now the data has been captured you are ready to move on to the processing stage.

Stacking and Sharpening the Data

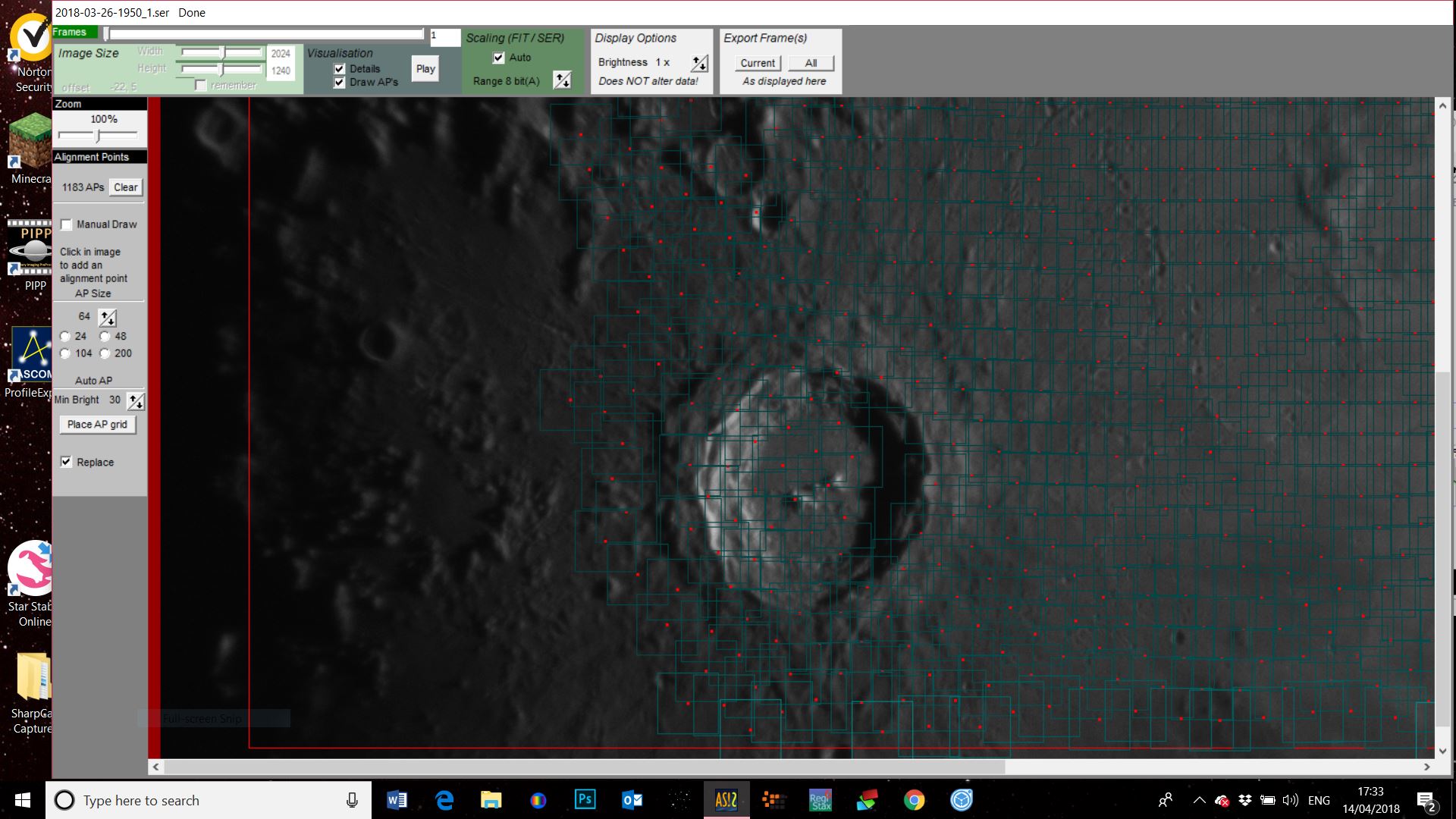

The first processing step is to stack the videos you have captured, to do this I recommend using AutoStakkert 3. The image below shows one of the captured .ser files open in the application, it shows how I would normally set the various options on the screen. The only item I may change is the frame percentage to stack as this will vary depending on the quality of the input video. I try to keep it around 50% but always perform an analyse on the image first to confirm this is OK, if not I’ll reduce the percentage accordingly. Another point to note is that you don’t need masses of alignment points, here I just have 9 selected by the software with multi-scale checked. A large number of small alignment points can in fact often produce a much worse result.
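The idea behind stacking only a percentage of frames can be sketched in a few lines. This is a generic illustration of "keep the sharpest frames" and is not how AutoStakkert itself works internally; the quality scores are invented and `select_best_frames` is a hypothetical helper.

```python
def select_best_frames(quality_scores, keep_percent):
    """Return the indices of the highest-scoring frames, best first."""
    if not 0 < keep_percent <= 100:
        raise ValueError("keep_percent must be in (0, 100]")
    keep = max(1, round(len(quality_scores) * keep_percent / 100))
    ranked = sorted(range(len(quality_scores)),
                    key=lambda i: quality_scores[i],
                    reverse=True)
    return ranked[:keep]

# Hypothetical per-frame sharpness scores (higher is better).
scores = [0.62, 0.91, 0.45, 0.88, 0.70, 0.33, 0.95, 0.58]
best = select_best_frames(scores, keep_percent=50)
print(best)  # [6, 1, 3, 4]
```

If the quality drops off sharply after the best few frames, lowering the percentage keeps the poorer frames from softening the stack.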

Once I’m happy everything is set correctly I will stack the first file, once this is complete I’ll select the others in file manager and simply drag them onto the open AutoStakkert window and hit stack again. This will process the other files as a batch using the same parameters as the first video - at this point you can go off and have a coffee!

AutoStakkert will create a sub-directory within the directory holding the videos as shown in the image below. The name relates to the percentage of frames you stacked, or the number of frames depending on the stack option selected.

These files are now ready to process in Registax. Before I start processing I create a ‘Processed’ folder within the stacked images folder, this is where I will save the sharpened images.

You can see here one of the stacked IR videos with sharpening applied - don’t take the setting shown here as the ones you should use as this is totally dependent on the dataset you are processing, however make sure you don’t over sharpen - less is more!

I repeat the sharpening process for each of the 15 stacked videos, you’ll find the wavelets you’ve selected for IR and Red filtered images will remain pretty consistent. For the blue images you can be a little more aggressive with your sharpening as this is where you will be capturing any cloud detail.

Each sharpened image is saved in the ‘Processed’ directory with the AutoStakkert suffix removed as shown below - this enables them to be imported into WinJupos for the next stage of processing.
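If you have a lot of files, the suffix removal can be scripted. The sketch below is only an illustration: it assumes the stacking suffix looks like `_lapl5_ap12` (as in a name such as 2022-10-25-0234_9__lapl5_ap12), and `winjupos_name` is a hypothetical helper of my own, not part of any of the applications mentioned. Check it against your own file names before renaming anything.

```python
import re
from pathlib import Path

# Assumed pattern for the stacking suffix, e.g. "__lapl5_ap12" or "_lapl4_ap9".
STACK_SUFFIX = re.compile(r"_+lapl\d+_ap\d+$")

def winjupos_name(filename: str) -> str:
    """Strip the assumed stacking suffix, keeping the timestamp and extension."""
    path = Path(filename)
    return STACK_SUFFIX.sub("", path.stem) + path.suffix

print(winjupos_name("2022-10-25-0234_9__lapl5_ap12.tif"))
# 2022-10-25-0234_9.tif
```

Names that do not end with the assumed suffix are returned unchanged, so running it twice does no harm.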

De-rotation in WinJupos

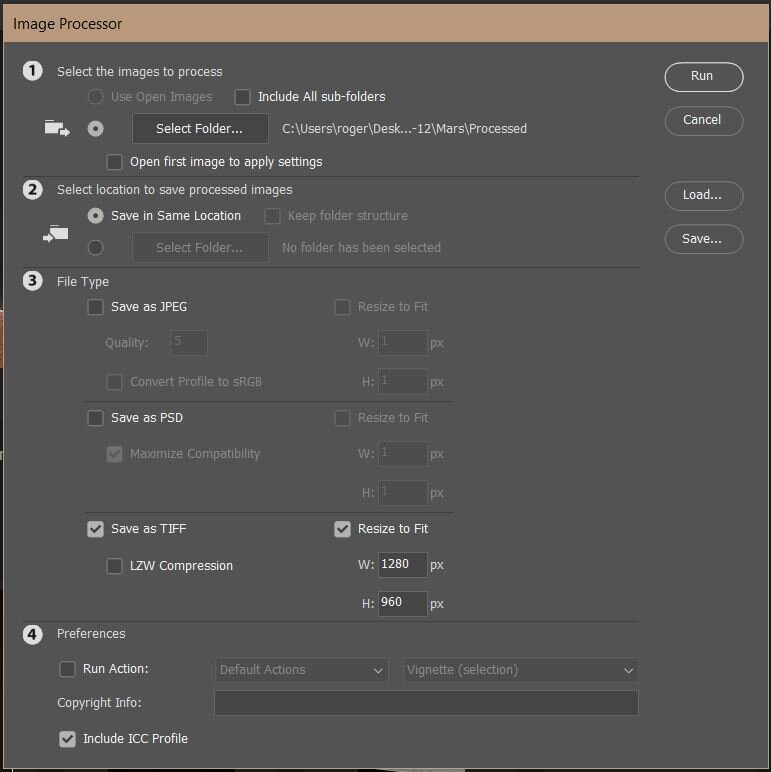

Before we begin the WinJupos magic remember what I said about image scale? I shot the videos using a capture area of 640x480 as the planet will fit into this area and reducing the area captured also means you can achieve higher frame rates. Before loading into WinJupos I resize the images to 200% using the [File] [Scripts] [Image Processor] menu item in PhotoShop CC. In the image below you can see that I have selected my ‘Processed’ folder, am saving as a TIFF file and have used ‘Resize to Fit’ 1280x960, doubling the image size.

Hit Run and PhotoShop will create yet another sub-directory called TIFF where the re-sized images will be saved, these are the ones we are going to load into WinJupos and process further.

People can be a little wary of using WinJupos but really there is nothing to fear, I’ll start with a little refresher.

Make sure you choose the correct celestial body (Menu item [Program] [Celestial Body]), in this case Mars.

Then choose Menu items [Recording] [Image Measurement] and open the first image in the folder containing the resized images. The file name you saved your images as earlier will automatically set the date and UT values, you may have to input your longitude and latitude.

Next choose the Adj tab and use the Up/Down/Left/Right keys on your keyboard to navigate the outline frame to the planet. Make the frame larger using Page Up and smaller by using Page Down and rotate it to the correct north south orientation using the N & P keys.

A useful feature for Mars is that in the Adj tab you can display the outline frame with additional graphic which adds the main features visible at the time your image was taken. This serves as a really useful way of correctly aligning the frame.

Another feature that is useful in aligning is the ‘LD Compensation’ check box, which will show the hidden edges of the planet for fine tuning.

Once happy deselect the LD compensation and go back to the ‘Imag.’ tab. There I input the filter used in ‘Image Info’ as this helps when compiling the final image - you should also record the channel in the ‘Adj’ tab.

Click F2 or the Save button to save the Image measurement file, I create yet another sub-directory to save these in - shown below. Repeat the process for all the sharpened images making any fine adjustments along the way - you will then end up with five image measurement files for each filter you shot.

Now to de-rotation!

Choose menu Item [Tools] [De-rotation of images]

Click on Edit and select Add, you then select all the image measurement files for one of your filter sets. You should have five files.

Change the LD value to around 0.80, otherwise you may get unwanted artefacts.

Select the destination directory (I’ve chosen my ‘Processed’ directory)

Make sure you have the correct north south orientation and hit Compile Image (F12) - this will create your de-rotated image for the selected filter set.

Repeat this process for all the other filter sets you have generated.

Here are the de-rotated IR, Red and Blue images. This is my final step in WinJupos but if you shot a set of green filter images you can then choose [Tools] [De-rotation of R/G/B frames] to construct an RGB image from your files (an image measurement file will have been automatically created for this purpose).

Processing Your De-rotated Images

You are now on the home straight, it’s time to create that synthetic green channel I’ve been referring to throughout this post.

First open the red and blue de-rotated images in PhotoShop and duplicate the blue image [Image] [Duplicate]

Copy the red image and paste it as a layer on the duplicate blue image

The red layer should be ‘normal’ and opacity should be changed to around 50%

Flatten layers and save as synthetic green. This is the image you will use as your green channel.
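Numerically, the steps above amount to averaging the red and blue channels. Here is a minimal sketch, assuming a normal blend at 50% opacity is a straight 50/50 mix of the two layers; the pixel values are made up and a real image would have far more of them.

```python
def blend_normal(top, bottom, opacity):
    """Normal blend mode: opacity * top layer + (1 - opacity) * bottom layer."""
    return [opacity * t + (1.0 - opacity) * b for t, b in zip(top, bottom)]

# Hypothetical pixel values (0.0 = black, 1.0 = white) from the two channels.
red = [0.80, 0.60, 0.40]
blue = [0.20, 0.30, 0.10]

synthetic_green = blend_normal(top=red, bottom=blue, opacity=0.5)

# Combine the three channels into RGB pixels.
rgb = list(zip(red, synthetic_green, blue))
print([round(g, 2) for g in synthetic_green])  # [0.5, 0.45, 0.25]
```

Changing the opacity shifts the synthetic green towards the red or the blue data, which is worth trying if the colour balance looks off.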

Below we can see the RGB image (top right) created by pasting the red, blue and synthetic green images into the relevant channels. Give this image a relevant name (RGB) and save to the directory you are storing your processed images in.

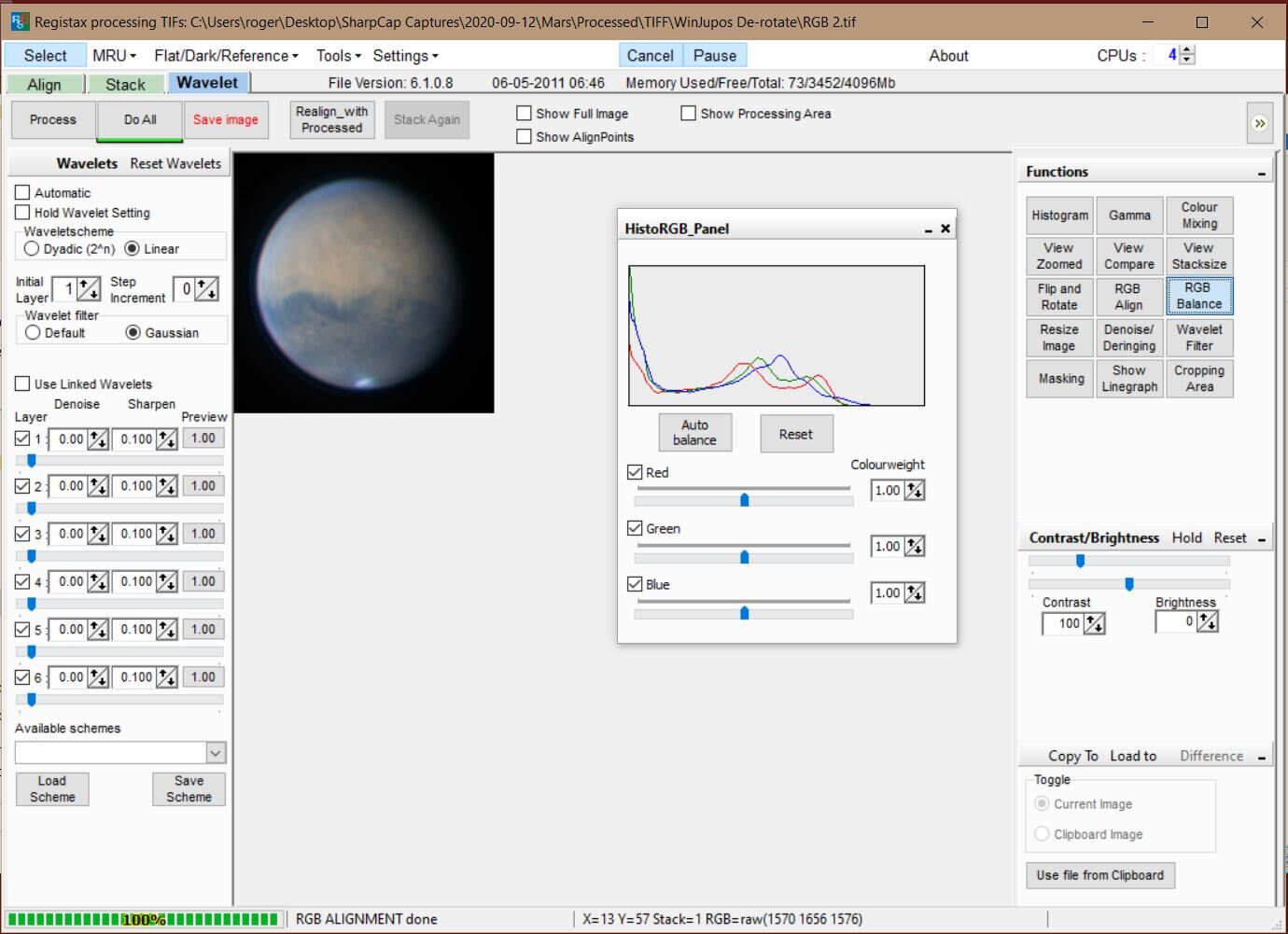

For the next stage we leave PhotoShop and go back into Registax. Here we will perform an RGB align and balance. First open your RGB image and choose RGB Align from the functions menu. Make sure the green box covers the whole planet and then click Estimate.

Next select RGB Balance and click Auto Balance, once done remember to click ‘Do All’ and ‘Save Image’

You have now completed processing on your RGB image, so open it in PhotoShop along with your IR image. There is a bit of debate as to whether you should use an IR or Red image as a luminance layer when imaging Mars, but I find IR gives better results for me when imaging from my city location.

Paste the IR image as a layer over the RGB and select luminosity - Save this as a new image named IRRGB.

You can then perform some final processing on the image, I tend to increase saturation slightly as applying the luminance tends to slightly wash out the image. I also may adjust contrast and colour balance as with the image below and perhaps perform a little more sharpening with an Unsharp mask and some additional noise reduction.

You can perform some final tweaks if you want to improve the aesthetics of the image, below I’ve used crop to increase the black space around the planet and tilted it using Free Transform. Once you’re happy flatten layers as necessary and save the final image. You’re done - well done for getting to the end and happy Mars season!!

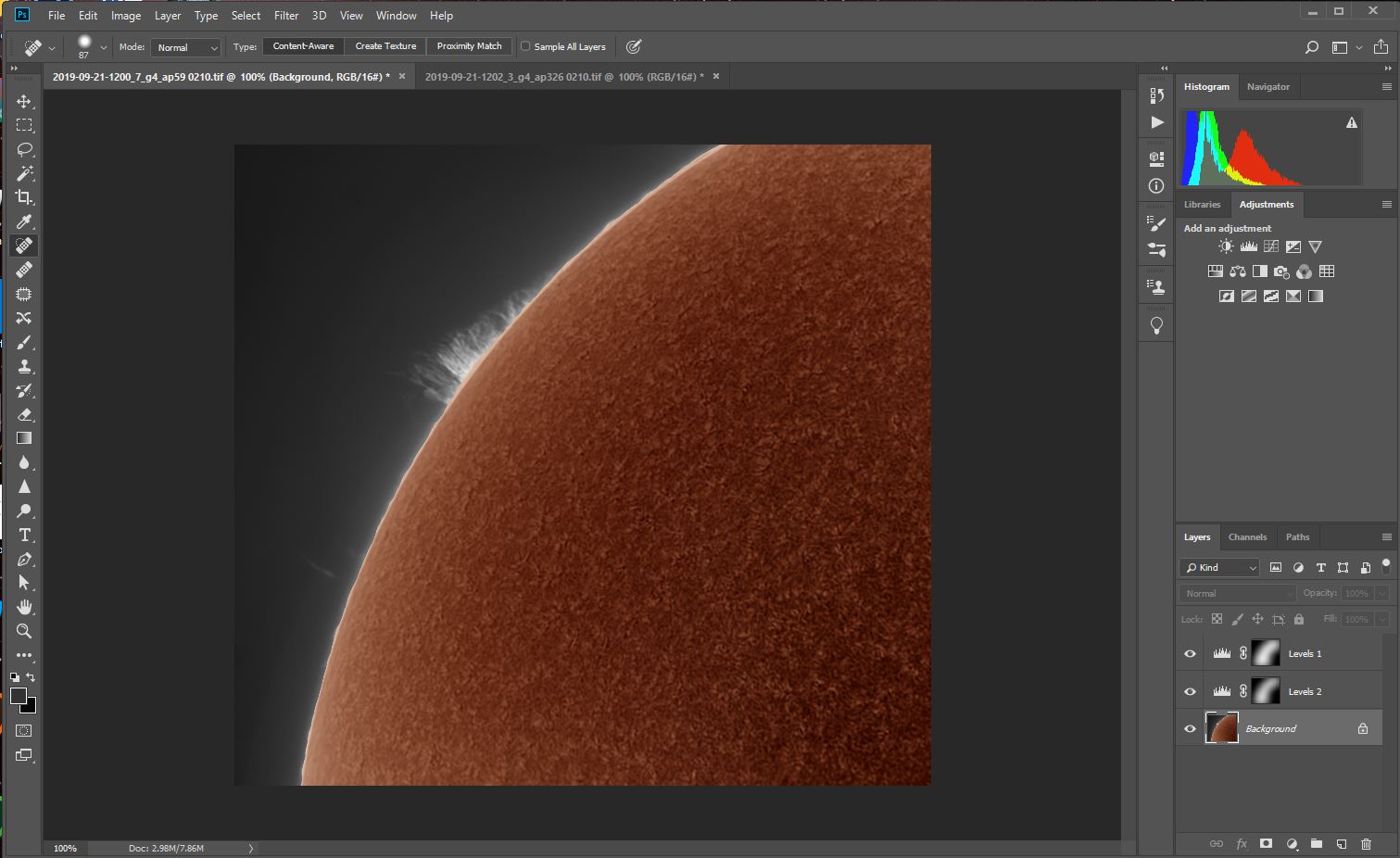

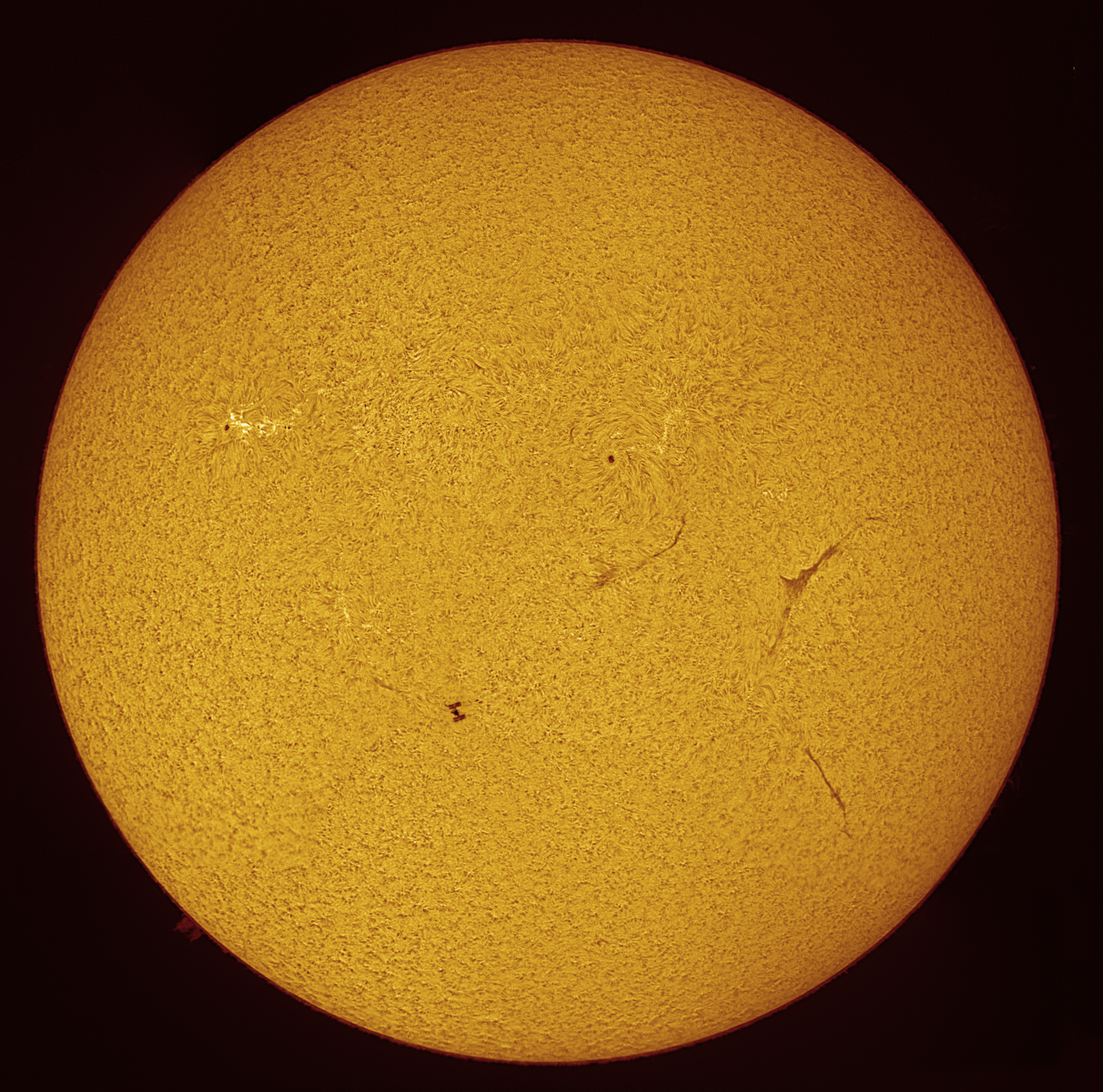

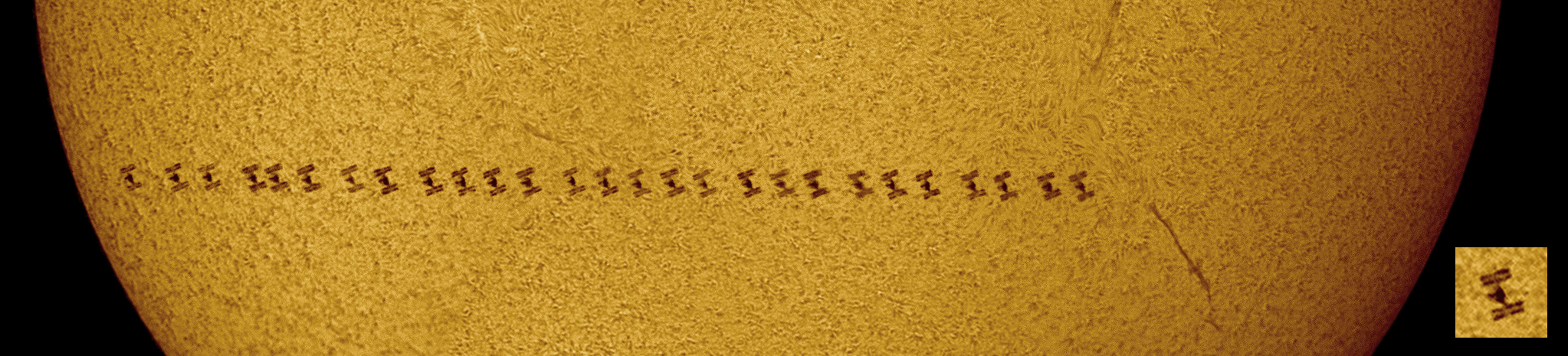

Detached Prominence - by Thea Hutchinson, runner up Young Astronomy Photographer of the Year 2020

Astronomy Photographer of the Year 2020

The month of September always means the announcement of the winners of Insight Investment Astronomy Photographer of the Year, an annual competition showcasing the world’s greatest space photography. This is the competition’s 12th year, with over 5000 entries being submitted from 6 continents.

This year of course things were a little different, the usual awards ceremony at the Royal Observatory Greenwich and latterly the National Maritime Museum being replaced by a virtual event.

The ceremony is normally a great opportunity to catch up with old astrophotography friends as well as connect with other like-minded people from across the globe. I’ve been lucky enough to attend four times, and this year would have been the fifth through the efforts of my daughter Thea. Sadly this was not to be, due of course to Covid-19, but Royal Museums Greenwich did a fantastic job presenting the awards remotely, with Jon Culshaw and Emily Drabek-Maunder hosting the ceremony, which can be seen here. I’m sure you’ll agree the very best was made of the terrible situation we all find ourselves in.

Thea’s image ‘Detached Prominence’ was awarded runner-up in Young Astronomy Photographer of the Year, which made her very happy and her parents very proud!

The winning images are always widely reported in the world’s press. A simple search will return lots of results, but here are a few links to coverage by some of the UK’s national newspapers and broadcasters: The Guardian, The BBC, London Evening Standard, Google News

False colour solar composite

Creating False Colour Images of Solar Prominences

Lately I have been experimenting with the way I present images of solar prominences and have had a few enquiries as to the method used to produce them. Here I’ll give a step by step guide to my workflow, hopefully in a way that will help you to produce similar images yourself.

Capturing the image

Obviously before you can process your images you need to capture them, as this post is more to do with processing I’ll briefly summarise the main points:

Equipment - images were taken using a Lunt LS60T Ha solar telescope, Televue 2.5x Powermate & ZWO ASI174MM mono planetary camera.

When to image - generally when imaging the sun it is best to acquire your shots at the beginning of the day rather than later as the atmosphere is generally more stable.

Image Capture - you will need to capture two images which you will blend later.

First make sure you have achieved good focus, use the solar disk for focussing and once you are happy you can start the prominence hunt.

Crank up the gain and exposure in your chosen image capture software and scan around the solar limb for likely candidates. They may be fainter than you anticipate so if you don’t see anything first time around up the gain and search a second time.

Once you have found a prominence you want to image adjust the gain and exposure so that none of the fine structure is over exposed but the main features show up well on the screen. The solar disk will be very over-exposed - don’t worry about this.

I capture around 3000 frames, it may take a little while as you will be imaging at a relatively low frame rate but this shouldn’t impact image quality. I find it is best to keep gain at around 50% and shoot at a slower frame rate as the result will be less affected by noise.

Once the prominence is captured drop the exposure time/gain so that the solar disk is correctly exposed, the prominence will likely disappear at this point. Make sure you don’t move the scope and capture around 3000 frames, this will be achieved much more quickly than the previous image.

Now you have captured your video of the prominence and solar disk you can move on to processing your image.

Processing your Images

To process the images I use AutoStakkert!2 for stacking, Registax 6 for sharpening and Photoshop Creative Cloud for final processing, I’ll take you through each step below.

Stacking & Sharpening your images

Open the video exposed for the prominence in AutoStakkert, setting the image stabilisation anchor over the prominence

Once the image has been analysed the quality graph can be used to determine the percentage of frames to stack, I have chosen 60% in this example.

Place the alignment points along the solar limb, I place additional smaller points over the prominences.

The stacked image is then opened in Registax for sharpening, I’ve not aggressively sharpened as I find over-sharpening can lead to a very artificial result

Now perform the same actions for the images exposed for the solar disk, here we are setting the alignment point prior to analysing in AutoStakkert

Set the alignment points in AutoStakkert - here I have gone for a stack of 60% of the images captured

Now we apply sharpening in Registax, once done the images are ready for processing in PhotoShop

Processing Your Sharpened Images

Once you have opened the sharpened prominence image and solar disk image, select the image of the disk and invert

While still in the inverted disk image select levels and set the mid slider in the red channel to around 1.40

Set green to around 0.79

Lastly set blue to around 0.60. You can play around with the levels of each colour channel until you find something that you like. I’m trying to reflect in a subtle way the colours seen when viewing the Sun in Hydrogen alpha.

Next copy and paste the processed disk image onto the prominence image with blending mode set to darken

Nudge the disk until it correctly aligns to the edge of the prominence layer and adjust the levels as required on the image of the prominence (the background layer)

Crop the image so that the areas of interest in the image are best presented

Final cropped image with some adjustment of levels on the solar disk

The final result - happy solar imaging and processing!
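For anyone curious why the invert, levels and darken steps combine so neatly, here is a rough per-pixel sketch. It assumes the Levels mid slider behaves like a gamma adjustment (output = input ** (1 / slider value)) and that the darken blend keeps the lower value in each channel; both are my approximations of what PhotoShop does, and the pixel values are invented.

```python
# Mid-slider values from the steps above, in red, green, blue order.
GAMMAS = (1.40, 0.79, 0.60)

def levels_midtone(value, gamma):
    """Approximate a Levels mid-slider move as a gamma curve."""
    return value ** (1.0 / gamma)

def false_colour_disk(grey):
    """Invert a mono disk pixel, then apply the per-channel midtone settings."""
    inverted = 1.0 - grey
    return tuple(levels_midtone(inverted, g) for g in GAMMAS)

def darken(top, bottom):
    """Darken blend: keep the darker value in each channel."""
    return tuple(min(t, b) for t, b in zip(top, bottom))

# On the disk: the prominence frame is blown out to white, so the coloured disk wins.
disk_pixel = false_colour_disk(0.5)
print(darken(disk_pixel, (1.0, 1.0, 1.0)))

# Off the limb: black sky inverts to white, so the prominence frame wins.
sky_pixel = false_colour_disk(0.0)
print(darken(sky_pixel, (0.4, 0.4, 0.4)))  # (0.4, 0.4, 0.4)
```

With these slider values the red channel ends up brightest and the blue darkest, which is where the warm tone of the disk comes from.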

Obligatory arrival selfie.

Insight Astronomy Photographer of the Year 2019

I had the great fortune of attending the Insight Astronomy Photographer of the Year awards ceremony again this year, but this time solely in the capacity of a guest of my daughter Thea, whose image of the planet Venus was highly commended in the Young Astronomy Photographer of the Year competition. Here are a few photos from the event.

Drinks reception in the entrance hall of the National Maritime Museum Greenwich

© National Maritime Museum, London

All set for the ceremony

© National Maritime Museum, London

Thea receiving her award from Jon Culshaw

© National Maritime Museum, London

The exhibition ready for guests - you can see myself and Thea on the big screen

© National Maritime Museum, London

Here’s a closer view of that film!

Thea by her winning image in the exhibition

The winning image (nicely overexposed!) as shown in the exhibition

The exhibition up and running

Thea was also lucky enough to be the subject of one of the short films which play within the exhibition space. I also feature in a rope pulling and pointing capacity. Here is the link to the film from the RMG website

https://www.rmg.co.uk/whats-on/astronomy-photographer-year/galleries/2019/young-competition

Finally here is Thea’s winning image

Thea’s highly commended image - ‘Daytime Venus’

Is it time to replace the blue glass in your Lunt solar scope?

If you are the proud owner of a Lunt Ha Solar telescope and have noticed that the amount of detail you are seeing through the eyepiece or when imaging is not what it once was, never fear, you aren’t going crazy. It may just be time to replace the blue-glass filter.

When I took my Lunt LS60 THa scope out of mothballs this year in readiness for a summer of solar observing I found that the views I was getting of the Sun were terrible, fine detail was completely absent and prominences were no longer visible. Luckily the issue could be tracked down to one component, the blue-glass filter that sits at the entry point to the diagonal containing the blocking filter.

Blocking filter with tarnished blue-glass

Over time (I’ve had my scope since 2014) humidity can affect the surface of the blue-glass filter causing the tarnishing seen in the images below, I was amazed I could see anything through such a compromised filter, it having turned almost completely opaque. I contacted Lunt through their website www.luntsolarsystems.com and they soon got back to me confirming that they would be able to send a replacement blue-glass filter free of charge via their European distributor Bresser GmbH. Within a few days the replacement filter arrived on my doormat, fantastic customer service, thank you Lunt and Bresser!

Old blue glass filter and the replacement - spot the difference

Replacing the filter couldn’t be easier. If the filter is being removed for the first time then you may have to rub off a small amount of silicone, applied by the manufacturer to ensure the filter remains in place during shipping. Next you can use either some fine pointed pliers or, as in my case, a set of compasses to unscrew the retaining ring, as shown in the image below. Once the ring has been unscrewed you can remove the old filter and pop in the new one. When screwing the retaining ring back on keep going until it is finger tight and then go back a quarter turn to allow for expansion as the filter heats up during a solar observing session.

Removing the old filter

You can now screw the tube back on to your blocking filter and mount it back in the scope. That’s it, you’re done, it really couldn’t be easier. I’m sure my issues were caused by storing my scope in a humid environment with no silica gel in its flight case. Now it sits in the house rather than the observatory and has a few bags of silica gel around the blocking filter to keep humidity to a minimum. Lesson learnt, but easy to fix thanks to the good people at Lunt and Bresser.

Blocking filter ready for action

Something From The Archives!

Back in 1984/85, when I was 17, I wrote some articles for ‘Stardust’, the journal of the Irish Astronomical Association. I’d totally forgotten about this until I found myself rifling through some boxes in the garage of my mother’s house and amazingly unearthed them! They need to be read through the lens of 35 years having passed, but I thought I’d reproduce scanned copies of the articles here for posterity.

Subjects covered are lunar exploration, the search for extra-terrestrial life and building a Dobsonian telescope, enjoy!

The Search For Extra Terrestrial Intelligence - Autumn 1984

Exploration of the Moon - Spring 1985

How To Build A Dobsonian Telescope - Spring 1984

Venus shot through an IR pass & UV filter

Imaging The Planet Venus

Venus is one of the most beautiful sights in the night sky, shining brightly as it hangs above the horizon at dawn or dusk. It is unquestionably a wonderful spectacle, but this low elevation presents a challenge for the astrophotographer as when an object is close to the horizon we are viewing it through a thick layer of atmosphere, conditions which rarely result in sharp images.

The fact that Venus is an inner planet (orbiting between the Sun and the Earth) means that in our skies it never strays too far from the Sun resulting in a low elevation when viewed against a dark sky, especially when it is showing a dramatic large crescent phase.

You might think this means you may as well give up on capturing decent images of our celestial evil twin but never fear, there are weapons in the astrophotography arsenal which mean we can image Venus when it is high in the daytime sky, even when at its closest to the Sun (inferior conjunction). In this post I’ll cover some of the techniques that hopefully will result in you producing images of Venus you will be proud of.

One very important point to mention is that you should always exercise caution when using a telescope if the Sun is above the horizon. Always keep the lens/mirror cover on when slewing the scope and NEVER look through the eyepiece if there is even a small chance that the Sun may be in the field of view.

It’s all about the Filter

The key to achieving a sharp image of Venus is to utilise the farthest regions of the visible spectrum and beyond as this will enable you to capture the planet whilst the Sun is still above the horizon.

The visible light spectrum is comprised of the following colours, detailed along with the wavelengths they occupy: - Red (625 – 740nm), Orange (590 – 625nm), Yellow (565 – 590nm), Green (520 – 565nm), Cyan (500 – 520nm), Blue (435 – 500nm) & Violet (380 – 435nm).

Electromagnetic Spectrum
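As a quick way of checking where a given filter sits relative to the visible bands listed above, here is a small sketch that looks up the band for a wavelength in nanometres. The band edges are the ones quoted in this post; the function name and the labels for anything outside 380 to 740nm are my own for illustration.

```python
# Visible bands from the text as (name, lower edge in nm), longest wavelength first.
BANDS = [
    ("Red", 625),
    ("Orange", 590),
    ("Yellow", 565),
    ("Green", 520),
    ("Cyan", 500),
    ("Blue", 435),
    ("Violet", 380),
]


def classify(wavelength_nm):
    """Return the band a wavelength falls in, using the edges quoted above."""
    if wavelength_nm > 740:
        return "Infrared"
    if wavelength_nm < 380:
        return "Ultraviolet"
    for name, lower_edge in BANDS:
        if wavelength_nm >= lower_edge:
            return name


print(classify(685))  # Red
print(classify(350))  # Ultraviolet
```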

As I’ve mentioned in previous posts longer wavelengths of light are less affected by atmospheric turbulence (seeing). I like to think of it in terms of a huge red oil tanker cutting through the waves whereas a small blue rowing boat would be bobbing around inducing sea-sickness in its occupants. The image through a red filter may be comparatively steady while the blue filter may produce an image that appears to ‘boil’ rapidly moving in and out of focus.

IR Pass Filter

Imaging in the near infra-red helps to combat poor seeing (within reason) and also has the added bonus of increasing the contrast between the sky and the object you are imaging, in this case Venus. As shown in the diagram above the normal visible spectral bandwidth is 400 – 700nm, I use a Baader IR Pass filter which blocks the transmission of any wavelength below 670nm (deep red), just inside the visible range, with a transmission peak at 685nm.

One important point to note is that you will need to use a camera that does not have an IR Cut filter as this will block the wavelengths you are trying to capture!

Venus shot using a Baader 685nm IR Pass filter and ASI174MM camera

UV Pass Filter

To the eye Venus appears a featureless white, but if you venture to the shorter wavelengths of the electromagnetic spectrum it is possible to tease out detail within the clouds that envelop the planet. For this I employ a Baader U-Filter (Venus and UV) which allows the bandwidths 320 – 380nm to pass, peaking at 350nm and completely blocking all other wavelengths in the spectral range 200 – 1120nm. Until relatively recently this type of filter was beyond the reach of most amateur astronomers due to their high cost, now they can be picked up for around £200, within the means of most dedicated planetary imagers.

As with IR filters you need to ensure that your planetary camera is sensitive to the relevant wavelengths and as the image produced will be dim an aperture of 8” (~200mm) is recommended. It is also worth noting that some mirror coatings may affect transmission so it is worth doing a little research with respect to your particular setup prior to purchasing this type of filter.

Venus through a Baader U Filter. Much more affected by ‘seeing’ but some cloud detail is now visible

Bringing it all Together

I’ve already gone into detail on the capture and processing of planetary images in a previous post (follow link here) so won’t repeat myself here, instead I’ll concentrate on the steps required to produce a false colour rendering of Venus using the processed images captured using the IR pass and UV filters I have described above.

In Photoshop we will assign the image taken through the IR pass filter to the RED channel, the BLUE channel will use the UV filtered image, but what about GREEN? This channel will use a synthetic green comprising a 50/50 blend of the IR and UV images.

Creating a Synthetic Green Channel

Creating the synthetic green channel is very simple and outlined below:

Open your UV and IR images in PhotoShop

Select and copy the IR image (Ctrl-A, Ctrl-C)

Paste it as a layer onto the UV image (Ctrl-V)

Select the layer containing the IR image and change the opacity to 50%

Flatten the image and name accordingly

Synthetic green channel creation in PhotoShop

The resulting Synthetic green channel image is shown below.

Synthetic green channel comprised of a 50/50 blend of the IR and UV images shown previously
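If you ever want to do the same thing outside Photoshop, pasting one layer over another at 50% opacity and flattening is just a per-pixel average of the two images. A minimal sketch, assuming both images are the same size and stored as lists of rows of greyscale values between 0.0 and 1.0 (the function name is hypothetical):

```python
def synthetic_green(ir, uv):
    """Return a 50/50 blend of two equal-sized greyscale images."""
    return [
        [0.5 * ir_px + 0.5 * uv_px for ir_px, uv_px in zip(ir_row, uv_row)]
        for ir_row, uv_row in zip(ir, uv)
    ]


# Tiny 1 x 2 pixel example images.
ir_image = [[0.25, 1.0]]
uv_image = [[0.75, 0.0]]
print(synthetic_green(ir_image, uv_image))  # [[0.5, 0.5]]
```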

Creating your false colour image

Now you can bring all your images together to create the final false colour render:

Open the processed IR, UV and Synthetic Green images in Photoshop

Select one of the images, copy it and create a new file - (Ctrl-A, Ctrl-C, Ctrl-N). Make sure it is RGB colour, you can also name it at this stage - I tend to use the timestamp of the IR image and also name it something like ‘Venus IRSynGUV’

Creating a new file to save your final image into

Select each of your images in turn and paste into the correct colour channel of the new (4th) image file:

IR image to the RED channel

Synthetic Green to the GREEN channel

UV to the BLUE channel

RGB image constructed using the steps outlined above.
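The channel assignment above can also be sketched in a few lines of code. This is only an illustration of the idea, assuming three equal-sized greyscale images stored as lists of rows; the function and variable names are made up.

```python
def merge_channels(ir, syn_g, uv):
    """Build an RGB image: IR -> red, synthetic green -> green, UV -> blue."""
    return [
        [(r, g, b) for r, g, b in zip(r_row, g_row, b_row)]
        for r_row, g_row, b_row in zip(ir, syn_g, uv)
    ]


# Tiny 1 x 2 pixel example; the green channel is the 50/50 IR/UV blend.
ir_image = [[0.25, 1.0]]
uv_image = [[0.75, 0.0]]
green_image = [
    [0.5 * i + 0.5 * u for i, u in zip(ir_row, uv_row)]
    for ir_row, uv_row in zip(ir_image, uv_image)
]

print(merge_channels(ir_image, green_image, uv_image))
```

Each output pixel is an (R, G, B) tuple, so areas that are bright in IR come out reddish and areas that are bright in UV come out bluish.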

As a final step you may wish to copy your IR image as a luminance layer onto your final IRSynGUV image, this will generally create a crisper, brighter result. You can adjust the opacity of the luminance layer to taste and also alter the levels, rotate the image etc. Once you have completed this you should end up with a result something like that shown below.

Good luck and make sure you enjoy observing this beautiful planet.

Final IR, IR, SynG, UV image

National Maritime Museum - Greenwich

Insight Astronomy Photographer of the Year 2018

For most people autumn in Greenwich means crisp walks amongst trees and colourful leaves in the park or strolls along the side of the Thames with obligatory stops in the many pubs along the way. For me however it can only mean one thing, the annual Insight Astronomy Photographer of the Year awards! I was lucky enough to be shortlisted and as an added bonus my daughter was placed in the Young Astronomy Photographer of the Year competition. We had our invites and made one of the shorter journeys to the ceremony, Wimbledon being much closer to Greenwich than Australia where the winner of the Galaxies prize had travelled from.

This year marked the tenth anniversary of the competition so to celebrate the awards and associated exhibition have moved down the hill from the Royal Observatory to the National Maritime Museum (NMM). The new venue means a larger space in which to host the ceremony and, more importantly, a much larger exhibition gallery enabling this year’s winning photos to be displayed alongside winning images from previous years. The exhibition is scheduled to remain in the National Maritime Museum for the next few years at least so the extra space (no pun intended) will be a continuing benefit. Freeing up the gallery at the Royal Observatory will mean that a number of themed photographic exhibitions can now be staged celebrating different aspects of space and astronomy as well as marking significant anniversaries.

Drinks reception prior to the ceremony, held in the main entrance hall of the museum

Copyright National Maritime Museum, Greenwich, London

The awards evening started with a champagne reception in the main entrance hall of the NMM. A chance to catch up with old friends, chat with some of the judges and peruse some of the museum’s impressive collection, though my daughter Thea was slightly concerned that the large silver speedboat you can see on the left of the image above would slip its cables and fall on her head!

After an hour of mingling it was up the stairs to the Great Map upon which round tables were set and a stage had been erected, very different from previous awards ceremonies which took place in the more intimate surroundings of the Peter Harrison planetarium.

The venue for the ceremony, upon the Great Map at the NMM

Copyright National Maritime Museum, Greenwich, London

The awards were hosted by the impressionist and comedian Jon Culshaw which always guarantees an enjoyable evening. The announcement of the prizes (or calling the bingo as Jon called it) was peppered with contributions from Brian Cox, Patrick Moore, Carl Sagan & Tom Baker to name a few, all voiced with stunning accuracy by Jon.

The category we had been most eagerly awaiting was the Young Astronomy Photographer of the Year and luckily it was the first category in which the winners would be revealed so we didn’t have to endure the suspense too long! The quality of the images in this category never ceases to amaze me and always fills me with hope that the next generation of astrophotographers is more than capable of moving this hobby forward in new and exciting directions. Fabian Dalpiaz deservedly won the category with a beautiful skyscape featuring a moonlit landscape with a meteor lighting up the sky. Thea was placed highly commended for her image of the ‘Inverted Sun’, of which as a family we are justifiably proud, and she’s already planning next year’s submission.

Thea receiving her Highly Commended certificate from Jon Culshaw

Copyright National Maritime Museum, Greenwich, London

It sounds cliched but it is true that every year the quality of images seems to improve, over the rest of the night we were treated to some amazing images, all deserved winners. I’ll make a special mention of Martin Lewis who was placed first and second in the Planets, Comets and Asteroids category with his images ‘Grace of Venus’ and ‘Parade of the Planets’, having won this category last year I was delighted to see Martin take over the title.

Two other photographers really caught my eye, Nicolas Lefaudeux who won the ‘Aurorae’ and ‘Our Sun’ categories with two stunning compositions, his solar eclipse image displaying a mind boggling dynamic range, and Mario Cogo who was placed first and second in the ‘Stars and Nebulae’ category. His image of Rigel and the Witch Head nebula is both technically and aesthetically brilliant.

The overall winner was taken from the category ‘People and Space’, an absolutely beautiful image, ‘Transport the Soul’, showing the Moon and Milky Way lighting up the alien landscape of Moab, Utah, taken by the very talented Brad Goldpaint. Unfortunately Brad couldn’t be there on the night to collect his prize but we were treated to a video message from him where his excitement was clear to see.

All in all a brilliant set of winners, fitting for the tenth year of the competition. They can all be viewed via this link https://www.rmg.co.uk/whats-on/astronomy-photographer-year/galleries/2018

After the ceremony was over Thea and I made a quick dash downstairs to a small corner of the exhibition where we were to be interviewed for the Royal Observatory’s post awards Facebook Live stream. Thea gave a great interview with me standing proudly beside her, then she was off for a quick interview with Sky at Night Magazine, hopefully this new found fame won’t go to her head!

The new Astronomy Photographer of the Year exhibition space

Copyright National Maritime Museum, Greenwich, London

Once the interviews were over we had a chance to have a proper look around the new exhibition space and we weren’t disappointed. The best thing about the increased size of venue is that the exhibition can reveal itself to you, each time you turn a corner you are treated to more and more stunning images. It has also meant that the photographs can be presented on a large scale and that images from previous years can also be shown, sort of a ‘greatest hits’. We were all hugely impressed, though the Royal Observatory still holds a special place in my affections I think the move to the National Maritime Museum has further enhanced this amazing competition.

As a footnote the next day saw an early start to travel back to Greenwich to attend the awards brunch, a chance to eat croissants, drink coffee, chat with the judges and have a more leisurely stroll around the exhibition. Thea and I also took the opportunity to tour the other exhibition spaces in the National Maritime Museum, particularly enjoying the Polar Worlds gallery. Well worth visiting, on your way back from the Royal Observatory of course! The Thursday night saw me making a third trip to Greenwich in as many days to appear on the panel for the fourth ‘Evening of Astrophotography’, a great night with some excellent questions from the audience. Make sure you come next year, enjoy the exhibition and be inspired to look up and try to capture our beautiful night sky for yourself.

Myself and Thea posing in front of her solar image in the new IAPOTY exhibition gallery

Copyright National Maritime Museum, Greenwich, London

Aperture Fever!

What Scope Should I Buy?

One of the questions I get asked most often is:

“I’m interested in getting started in astronomy, can you recommend a telescope?”

The problem with that question is the answer depends on where your interests lie, do you want to use it to image and/or observe the Sun, Moon and planets, the deep sky (stars, nebulae, galaxies), or a little bit of everything?

So it really pays to think about how you want to use your scope and the various options available to you before parting with your hard earned cash.

Types of Telescope

Before we go any further it is probably worth briefly describing a little about the history and limitations of the various types of telescope available to the amateur astronomer.

Refractors

A refractor is probably what most people think of when asked to visualise a telescope, and was the first to be systematically employed for astronomy by Galileo Galilei in 1610. If you travel to Syon House just outside London you will see that Englishman Thomas Harriot could also claim some firsts, including being the first to use a refractor to record a telescopic observation of the Moon and sunspots.

Plaque at Syon Park, Brentford celebrating the achievements of Thomas Harriot

In simple terms the refractor focusses light via a bi-convex lens (the objective). You can see from the diagram below that a simple refractor does have a problem in that the glass used to make the objective lens has a refractive index which decreases as the wavelength of light increases. As a result of this if you move the focal plane to the point of blue focus you will see a sharp blue point of light surrounded by a red halo, moving the focal plane to the red point of focus will result in a sharp red point of light with a blue halo, this is called chromatic aberration. A compromise would be moving the focal plane to the ‘circle of least confusion’, but at this point nothing is properly in focus!

Chromatic aberration in a refractor & how to rectify it. Source Wikipedia
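To put some rough numbers on chromatic aberration, here is a minimal sketch using the thin-lens lensmaker's equation for a symmetric bi-convex lens, f = R / (2(n - 1)). The refractive indices are approximate figures for a typical crown glass and the 1000mm surface radius is an arbitrary assumption, so treat the output as illustrative only.

```python
def focal_length_mm(radius_mm, refractive_index):
    """Thin symmetric bi-convex lens: f = R / (2 * (n - 1))."""
    return radius_mm / (2.0 * (refractive_index - 1.0))


# Assumed values for illustration (roughly those of a common crown glass).
RADIUS_MM = 1000.0
N_BLUE = 1.5224  # approximate index at about 486nm
N_RED = 1.5143   # approximate index at about 656nm

f_blue = focal_length_mm(RADIUS_MM, N_BLUE)
f_red = focal_length_mm(RADIUS_MM, N_RED)

print(f"Blue focus: {f_blue:.1f}mm")
print(f"Red focus:  {f_red:.1f}mm")
print(f"Focus shift: {f_red - f_blue:.1f}mm")
```

Because the index is higher for blue light, blue comes to focus closer to the lens than red, which is the gap an achromatic doublet is designed to close.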

Never fear, modern refractors shouldn’t suffer from chromatic aberration due to the efforts of English optician John Dollond (still on the UK high street as the opticians Dollond & Aitchison). He patented the achromat in 1758 which corrects chromatic aberration by using two lenses made of different materials (crown and flint glass). The crown glass converges the light & introduces chromatic aberration, the flint glass element has a refractive index which strongly affects blue light thus correcting the chromatic aberration.

The addition of a third lens (triplet) will bring the green wavelength of light to the same focus as well, these are called apochromats (APOs) resulting in red, green and blue wavelengths all being brought to the same focus. Pretty much every astronomical refractor on the market today will be an APO but I think it is always useful to know what this actually means. Addition of further lenses will offer benefits such as field flattening (round stars at the edge of the field of view) or focal length reduction.

Pros and cons

If you are considering buying a refractor here are some pros and cons. First the good points:

- Refractors are generally stable against temperature changes over the course of a night as the expansion and contraction of the front and back surfaces of the lens tend to cancel each other out.

- They require minimal maintenance as they are a sealed unit

- Collimation is not required

- Capable of producing reliable, high quality images

- Compact and easily transportable

On the flip side:

- High quality lenses are much more difficult and expensive to manufacture than mirrors so if you want an aperture comparable with even the smallest of reflecting telescopes it will come with quite a high price tag.

- Traditional refractors tend to have quite a long focal length meaning that the image is spread over a large area of the focal plane effectively dimming the image, however refractors with short focal lengths (‘fast’) are available and there are some reasonably priced, good quality fast refractors on the market.

Reflecting Telescopes

Reflecting telescopes, as the name suggests, use mirrors to collect and focus light and so are free from chromatic aberration. This doesn’t mean that reflectors are problem free, with a spherical mirror (the mirror being a section of a sphere) light rays from the edge of the mirror achieve focus nearer the mirror than those from the centre. This causes blurring known as spherical aberration. You may have heard that term before as this is what plagued the Hubble Space Telescope before the shuttle mission to repair it. Spherical aberration is eliminated by using a parabolic mirror where the reflected light achieves focus at the same point.

In the section on refractors you may recall I mentioned field flattening, this corrects yet another aberration called off-axis aberration where objects at the edge of the field of view suffer from various types of distortion, coma being the one that causes the most angst. At the centre of the field your stars may be sharp points but at the edge they may appear distorted and comet like (hence the name). Field curvature is also a common issue where the best focus lies on a curved plane resulting in the stars at the edge being blurred, field flattening lenses are employed to correct this issue. I’ll touch upon how these problems have been addressed in the various designs available.

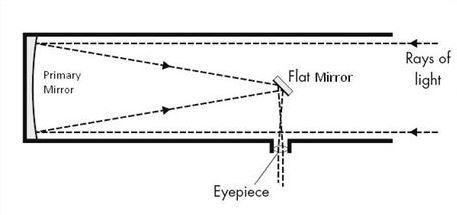

Newtonian – Isaac Newton is credited with making the first reflecting telescope in 1668 coming up with the eponymous design shown in the diagram below. The telescope consists of two mirrors, the primary which is a concave parabola and the secondary which is flat. The primary collects the light and the secondary, inclined at an angle of 45 degrees, directs it so that the point of focus is to the side of the primary mirror. This design still works well for small amateur scopes but for larger scopes this configuration becomes impractical.

Newtonian Reflector. Credit: oneminuteastronomer.com

Cassegrain - Hot on the heels of Newton in 1672 Laurent Cassegrain developed the design which carries his name. The primary mirror is the same as in the Newtonian design but the secondary, rather than being flat is convex. This reflects the light back to the centre of the primary where it then passes through a hole and comes to focus just behind it. This has the benefit of enabling instrumentation to be fitted without the balance problems of a Newtonian design and delivers long focal lengths without the corresponding increase in the length of the optical tube.

Cassegrain Reflector. Credit: Vik Dhillon (http://slittlefair.staff.shef.ac.uk/teaching/phy217/lectures/telescopes/L07/index.html)

Ritchey-Chretien - Remember off-axis aberrations? The Newtonian and Cassegrain designs both suffer from coma so to rectify this George Ritchey and Henri Chretien jointly developed the Ritchey-Chretien telescope in the early 1900s. This is basically a modified Cassegrain with a concave hyperbolic primary and convex hyperbolic secondary, removing spherical aberration and coma and reducing the effects of field curvature. The trade-off is that the secondary mirror is larger and covers some of the primary but imaging performance over a wide field of view is much improved on the Newtonian or Cassegrain designs. For the amateur they do tend to be expensive due to the increased complexity of producing hyperbolic mirrors.

Catadioptric Designs

Celestron Edge HD Schmidt-Cassegrain Telescope in my home observatory

You don’t have to limit yourself to only using mirrors or lenses, you can of course combine the two in what is known as a catadioptric telescope. Three of the most common are the Schmidt, Maksutov-Cassegrain and Schmidt-Cassegrain.

Schmidt – Also known as the Schmidt Camera, this was designed to image large fields of view. A spherical primary mirror receives its light through a thin aspherical lens (corrector plate) which compensates for spherical aberrations. The spherical mirror means that this design is free from astigmatism.

Maksutov-Cassegrain – This design combines a spherical mirror with a weakly negative meniscus lens or corrector plate which corrects the problems of off-axis and chromatic aberration. Patented in 1941 by Russian optician Dmitri Dmitrievich Maksutov it is based on the Schmidt camera and uses the spherical errors of a negative lens to correct the errors of the spherical primary mirror. In the Cassegrain version there is an integrated secondary using all spherical elements, simplifying the build and making this design cheap to manufacture. A 127mm ‘Mak’ makes a great first telescope.

Schmidt-Cassegrain - As the name suggests this is a hybrid of the Schmidt and Cassegrain designs. The big advantage of this design for the amateur astronomer is that it is cheap to mass produce as it uses spherical mirrors, spherical aberration being dealt with by a corrector plate. This design does suffer from off-axis aberrations as the corrector lens is not placed at the centre of curvature, this is corrected for in more expensive models of Schmidt-Cassegrain such as Celestron’s Edge HD range. This design delivers a long focal length in a short tube resulting in a powerful, compact and portable scope. The focal ratio is typically f/10 and they come in a range of apertures up to around 14” (356mm). There is a practical upper limit of around 16” (406mm) as manufacture of a corrector for larger apertures is costly and can suffer from flexure making it impractical to go much larger with this design.

Schmidt-Cassegrain. Credit:oneminuteastronomer.com

Pros and cons

If you are considering buying a reflector or catadioptric scope here are some pros and cons. First the good points:

- Reflectors and catadioptric telescopes deliver much greater apertures and hence light gathering power than refractors in a similar price range.

- The compact design of Schmidt-Cassegrain and Maksutov-Cassegrain scopes delivers long focal lengths in a small package (for smaller aperture scopes). Anything up to an 8” scope (203mm) will be easy to handle and transport and the smaller 5” (127mm) ‘Maks’ can fit in a small (ish) bag while delivering typical focal lengths of 1500mm.

On the flip side:

- They can suffer from changes in temperature through the course of an observing session so you need to be mindful that focus may shift.

- Newtonian and truss designs are not sealed units so suffer from collimation issues. You will have to learn how to collimate your scope to achieve the best results. Collimation will also have to be performed on Schmidt-Cassegrains but if you are not transporting it regularly this will not be as much of an issue as with a Newtonian for example. The good news is collimation should not be an issue with Maksutov-Cassegrain scopes.

- They can be large and cumbersome, especially the Newtonian design where the focal length of the scope is reflected in its length rather than being folded as in the Cassegrain design.

Imaging versus Observing

Of course you aren’t just going to buy a telescope, you are also going to be buying something for it to sit on so think about whether you will primarily use your scope for visual observing or want to try your hand at astrophotography. This is important as it will have a bearing on the type of mount you will require.

For the beginner in visual astronomy and astrophotography I would recommend a driven ‘Go-To’ mount. Once properly aligned all you will need to do is select the target you want to observe on the hand controller, or in some cases via an app. This is particularly useful in urban locations as often you simply can’t see the stars required to star-hop to an object, and anyway this is a tricky skill to learn when just setting out.

There are some bargains to be had if you go down the un-driven route with ‘Dobsonian’ scopes offering large aperture at relatively low prices but I would leave these until you are more familiar with the sky and have established where your interests lie. It can be a frustrating activity trying to find an object and keep it in view at high magnification!

If you are a visual observer or are just planning on shooting the Sun, Moon or planets then you should be able to get away with a simple alt-azimuth driven mount. These track the object you have selected in two axes in a kind of stepping motion so your target will always stay in view, but this won’t be suitable for long exposure photography. For long exposures you will require a sturdy, polar aligned equatorial mount. This tracks in one axis meaning that the scope will smoothly track your chosen object from east to west. Equatorial mounts are more complex to set up and align and are normally much heavier than a simple ‘Alt-Az’ so if you think this may be a problem for you start off with the simpler Alt-Azimuth mount. You can always purchase an equatorial mount later as your interest develops while retaining your scope.

Celestron Advanced VX Equatorial mount

The Sun, Moon and Planets

The primary consideration when photographing or observing the Sun, Moon or planets is image scale, you want your scope to deliver high magnification so a long focal length is required. Traditionally the recommendation would have been a long focal length refractor but these are expensive, or if cheap low quality, so I would recommend a Schmidt-Cassegrain or Maksutov-Cassegrain design. Go for the largest aperture you can afford in your price range as at long focal lengths light gathering power and resolution are important. If you go for an 8” scope you’ll find this should serve you well for years.

The Deep Sky

If your interests are in observing star clusters, galaxies such as the Andromeda Galaxy (M31) or nebulae such as the Orion Nebula (M42) then high magnification is not what you are after. Here a fast refractor will produce beautiful wide field views and a small objective lens should not be a problem.

A lot of people don’t appreciate the size of many deep sky objects, M31 covers an area of over 3 degrees, that’s 6 full Moon widths so you need a wide field of view to fit it in! A 65mm f/6 refractor will provide you with lovely wide field views and will also enable you to take beautiful images when coupled with a camera and the right mount. When looking at potential purchases bear in mind the issues mentioned in the refractor section of this post. You want to make sure it is an APO and if possible also delivers a flat field giving you round stars right to the edge of the field of view.
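To get a feel for whether a target like M31 will fit in the frame, you can estimate the field of view from the focal length and the width of the camera sensor using FOV = 2 × atan(sensor width / (2 × focal length)). The sketch below is only a rough guide: the 23.5mm sensor width is an assumed figure for a typical APS-C sized chip, not something specific to any camera mentioned here, and the function name is made up.

```python
import math


def field_of_view_deg(sensor_width_mm, focal_length_mm):
    """Horizontal field of view in degrees for a given sensor and focal length."""
    return math.degrees(2.0 * math.atan(sensor_width_mm / (2.0 * focal_length_mm)))


SENSOR_WIDTH_MM = 23.5  # assumption: roughly APS-C sized sensor

# 65mm f/6 refractor: focal length = 65 x 6 = 390mm
wide = field_of_view_deg(SENSOR_WIDTH_MM, 65 * 6)

# 203mm f/10 Schmidt-Cassegrain: focal length = 2030mm
narrow = field_of_view_deg(SENSOR_WIDTH_MM, 203 * 10)

print(f"65mm f/6 refractor: {wide:.2f} degrees")
print(f"203mm f/10 SCT:     {narrow:.2f} degrees")
```

With these assumptions the small refractor covers a little over 3 degrees, enough for M31, while the long focal length scope covers well under 1 degree.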

A Little Bit of Everything

It’s likely that if you are just starting out you won’t know exactly where your interests lie. If this is the case I would lean towards a scope that is going to deliver good views of the Sun (with a suitable filter), Moon and Planets while also enabling you to view brighter deep sky objects such as open and globular clusters. A 5” (127mm) or 6” (152mm) Schmidt-Cassegrain or Maksutov design will serve as a good all-rounder, delivering reasonable aperture and focal length.

A word on Eyepieces

First you need to know how to calculate the magnification your eyepiece will deliver. Start by working out the focal length of your scope by multiplying the aperture in mm by the focal ratio, so a scope with a 203mm aperture and a focal ratio of f/10 will have a focal length of 2030mm. The magnification provided by your eyepiece is calculated by dividing the focal length of the telescope by the focal length of the eyepiece, so a 25mm eyepiece will deliver a magnification of 2030/25 = 81.2, or roughly 81 times (81x).

Typically a telescope will be supplied with a 25mm eyepiece. You may want to supplement this with a 9mm for higher magnification, or invest in a Barlow lens or Powermate. These will effectively increase the focal length of your scope by a stated amount, e.g. 2.5x, so your 2030mm scope will deliver an effective focal length of 5075mm with a 2.5x Barlow or Powermate, with a 25mm eyepiece delivering a magnification of 203x. Barlows and Powermates are especially useful for high resolution planetary, lunar and solar imaging, so if you think this is going to be your thing then I would recommend investing in one.
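If you would rather not do the sums by hand, the arithmetic above can be sketched in a few lines of Python. The function names are my own, chosen for illustration; the numbers are the ones used in the examples above.

```python
def focal_length_mm(aperture_mm, focal_ratio):
    """Focal length of the scope: aperture multiplied by focal ratio."""
    return aperture_mm * focal_ratio


def magnification(scope_focal_length_mm, eyepiece_focal_length_mm, barlow=1.0):
    """Magnification, with an optional Barlow/Powermate multiplier."""
    return scope_focal_length_mm * barlow / eyepiece_focal_length_mm


scope = focal_length_mm(203, 10)  # 203mm aperture at f/10

print(scope)                                        # focal length in mm
print(round(magnification(scope, 25), 1))           # 25mm eyepiece alone
print(round(magnification(scope, 25, barlow=2.5)))  # 25mm eyepiece + 2.5x Barlow
print(round(magnification(scope, 9)))               # 9mm eyepiece alone
```

Trying a few eyepiece and Barlow combinations this way is a quick check on what a new purchase will actually give you before you spend the money.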

Generally the eyepieces supplied with cheaper telescopes will not be of great quality but will suffice when starting out. If you do decide the hobby is for you it makes sense to upgrade to a more expensive model at some stage.

Still Can’t Decide?

Here is a summary and a few key points to consider:

- Don’t run before you can walk. Start small while you dip your toes in the water. If you get the astronomy bug there’s plenty of time to upgrade as your skills develop.

- The best scope for you is the one you will use. Don’t choose something that is heavy or difficult to transport and set up. You’ll end up cursing it and coming up with excuses not to spend a night under the stars.

- For planetary, lunar and solar observing and imaging you need a long focal length.

- If you want to observe or photograph the deep sky a short focal length is best, ideally coupled with a large aperture.

- If you want to take long exposures you’ll need an equatorial mount.

- If you are still unsure about what you should buy you could go for a pair of binoculars. A pair of 10x50s will give great wide-field views, are relatively cheap, easily transportable and can be used for other things if you decide astronomy isn’t your thing after all.

A Cheat's Guide to Creating Smooth Planetary Animations

In a previous post I detailed how to capture and process high resolution planetary images, but what if you want to do something a little different with that hard earned data? A planetary animation is a really fun way of presenting your images and isn’t as hard to achieve as you might think, though it does require a little perseverance.

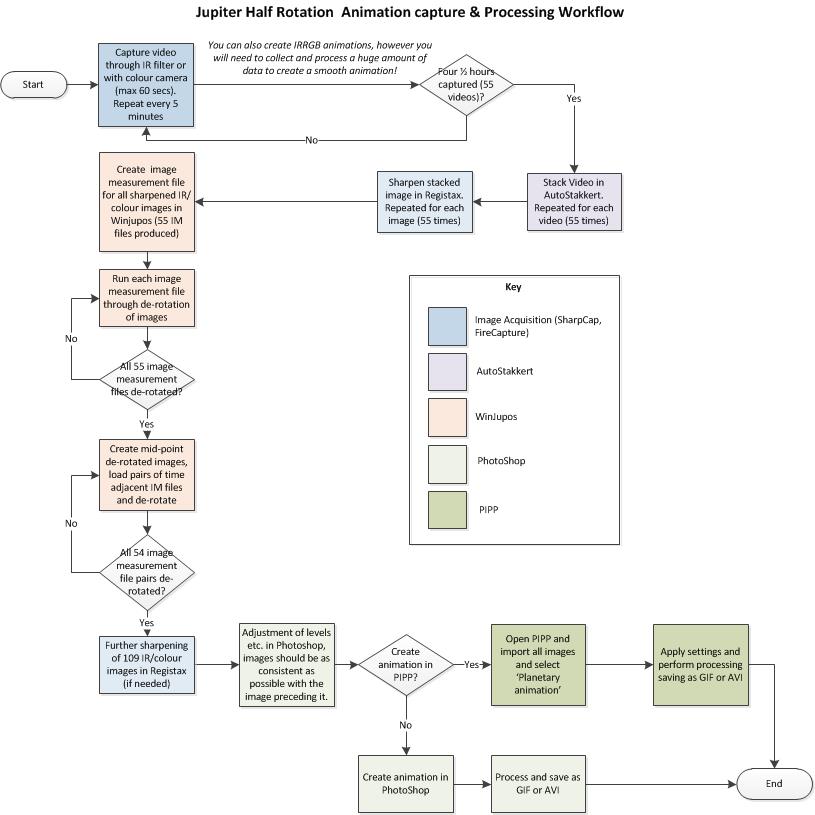

The workflow is shown below and described in the remainder of this post.

Planetary Animation Workflow

Choosing a Target

In my guide to planetary imaging and processing previously posted on this site I cover planetary rotation periods and the challenges they present in the capture of high resolution images. When creating planetary animations high rotation rates are no longer your enemy, but your friend.

To gain the best results we want to choose a planet with a fast rotation period and clear surface features, basically Mars and Jupiter. All the other planets either rotate too slowly or do not have enough surface detail to enable movement to be tracked by the eye. One possible exception is Venus, where if you are shooting through IR and UV filters you may be able to show some movement of the cloud layers high in the Venusian atmosphere.

How Many Frames Should I Shoot?

From my planetary imaging post you will see that Mars has a rotation period of 24.6 hours and Jupiter one of 9.9 hours. If shooting Jupiter over a 4 ½ hour period you will be able to capture almost half a rotation of the planet, and if you time your captures with ingress and egress of the Great Red Spot you will have all the makings of a striking animation. I have found the website Calsky (www.calsky.com) really useful in planning this type of project. Choose planets from the top menu, then choose the planet you are interested in and then ‘Apparent View Data’. You can then play with the dates and times to see what your best window of opportunity is.

The time of year you will be imaging is also a factor as you will need enough hours of darkness to capture all your frames, something that is a bit of a challenge here in the northern hemisphere as Mars and Jupiter both reach their best at a time when darkness is at a premium! With Jupiter, video shot over a period of as little as 30 minutes will still produce a nice result, so you don't have to go the full 4 ½ hours!
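To see how much rotation a given session will actually capture, here is a small sketch using the rotation periods quoted above. The function name is my own, and the calculation simply treats the planet as turning at a steady rate.

```python
# Rotation periods in hours, as quoted in the text
ROTATION_PERIOD_HOURS = {"Mars": 24.6, "Jupiter": 9.9}


def rotation_degrees(session_hours, period_hours):
    """Degrees of rotation covered during an imaging session."""
    return 360.0 * session_hours / period_hours


for planet, period in ROTATION_PERIOD_HOURS.items():
    for hours in (0.5, 4.5):
        degrees = rotation_degrees(hours, period)
        print(f"{planet}: {hours} h covers about {degrees:.0f} degrees")
```

The difference between the two planets is the reason Jupiter gives a worthwhile animation from a short session while Mars needs most of the night.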

The method you need to employ to capture your images is described in my guide to planetary imaging and processing, the difference being you will be taking a lot more shots of the same target. Say you aim to capture an image every 5 minutes. If you shoot using a colour camera or through one filter with a mono camera (IR or Red) you will end up with 12 images an hour, which will yield 55 images over a 4 ½ hour period (counting the first capture at the start of the session). If you wanted to produce a colour rotation video with a mono camera you would end up with 4 times that number of videos to process, a hard drive busting 220 videos! So I stick to mono through one filter, but you may be more dedicated than me!
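The frame counts above are easy to rework for a different cadence or session length. This is a minimal sketch with a function name of my own; it counts a capture at the very start of the session as well as one at the end, which is how 4 ½ hours at 5 minute intervals gives 55 rather than 54.

```python
def capture_count(session_minutes, interval_minutes):
    """Number of captures, including the one taken at the start."""
    return session_minutes // interval_minutes + 1


session = 4 * 60 + 30  # 4 1/2 hours in minutes
single_filter = capture_count(session, 5)

# Four filters per frame for a colour result with a mono camera
four_filters = single_filter * 4

print(single_filter, four_filters)
```

Halving the interval roughly doubles the number of videos to store and process, so it is worth running the numbers before you commit to a plan.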

Gaining a Smooth and Consistent Result

The key to a good rotation video is smoothness and consistency. The more images you have the smoother your result will be, this is where WinJupos comes to your rescue as it will enable you to generate additional images without having to shoot more video. Here are the steps you need to take (details on how to perform these actions can be found in my planetary imaging post):

- Capture and process each of your videos as normal (stack, sharpen etc.)

- In WinJupos create an image measurement (IM) file for each of the processed images and de-rotate each individual IM file. You should now have 55 WinJupos generated images (for 4 ½ hours' worth of data).

- Now load each contiguous pair of IM files and de-rotate them, e.g. load timestamps 00:00:00 and 00:05:00, and the result will be the mid-point between the two. If you do this for each adjacent pair you will end up with 54 extra images to add to your rotation video. Almost double what you started with! Now you have a healthy 109 images to make up your rotation video, with an effective gap of 2 ½ minutes between each frame, which should yield a lovely smooth result.
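To keep track of which pairs to load, it can help to list the timestamps you should end up with. The sketch below only does the bookkeeping (WinJupos does the actual de-rotation), and the start date is a placeholder of my own rather than a real session.

```python
from datetime import datetime, timedelta


def capture_times(start, count, interval):
    """Timestamps of the original captures, evenly spaced from the start."""
    return [start + i * interval for i in range(count)]


def with_midpoints(times):
    """Insert the mid-point between every adjacent pair of timestamps."""
    if not times:
        return []
    merged = []
    for earlier, later in zip(times, times[1:]):
        merged.append(earlier)
        merged.append(earlier + (later - earlier) / 2)
    merged.append(times[-1])
    return merged


# ASSUMPTION: placeholder start time, purely for illustration
start = datetime(2000, 1, 1, 0, 0, 0)

originals = capture_times(start, 55, timedelta(minutes=5))
all_frames = with_midpoints(originals)

print(len(originals), len(all_frames))
print(all_frames[1] - all_frames[0])  # gap between consecutive frames
```

Each extra timestamp in the merged list corresponds to one adjacent pair of IM files that needs de-rotating, so the list doubles as a checklist.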

Once all the images making up the rotation video have been generated and further sharpened it is a good idea to open each adjacent frame up side by side in PhotoShop and check that there are no large variations in levels etc. as this will affect the quality of your final animation. Try to make each adjacent frame as consistent as possible, tedious but well worth it in the end.

Generating Your Animation

Here I'll discuss how to generate your animation using the free software Planetary Imaging PreProcessor (PIPP - https://sites.google.com/site/astropipp/). There are lots of animation applications out there, for example, you can use PhotoShop to create a frame animation, or WinJupos to create an animation using a surface map pasted onto a sphere. Both these methods can yield great results but I'll limit myself to PIPP for now.

Creating a Planetary Animation in PIPP

On opening PIPP you will see a number of tabs. I'll go through the relevant ones in order.

Source Files - This tab is selected by default when you open the application. Here you need to click [Add Image Files] and select the frames you want to use for your animation. Make sure they are loaded in order. If they are timestamped they will automatically load in the correct sequence, otherwise you may need to make sure the start frame is at the top of the list and the last frame at the end, with all intervening frames in the correct order. Once you've opened your images a pop up will inform you that join mode has been selected. This is what you want, so just OK the message. Make sure the Planetary Animation box is ticked, as this will optimise the settings in the other tabs automatically.

Choose the images making up your animation

Images loaded into PIPP, Join mode selected and Planetary Animation ticked

Processing Options - The selections in this tab will default to those the software determines as optimal for planetary animation, however you may want to alter some of these. I've found that the object detection threshold may need to be amended if your images are quite dim. I also find that it is worthwhile enabling cropping so that you don't end up with a lot of blank space around your subject. There are handy 'test options' buttons which enable you to check that the options you have selected provide the desired result. If not, just tweak them until they do.

Processing options with object detection changed and cropping enabled

Animation Options - Here you can select how you want your frames to be played i.e. forwards, backwards, number of repeats, delay between repeats etc. Don't make the delay too long, just enough to show it is cycling through the images again is what you want.

Animation Options

Output Options - In this tab you can choose where you want your animation to be saved, the output format, quality and the playback frames per second. I've found that 10 frames per second gives a nice result, so aim for that if you have enough images. If you have a large number of input images you can go even higher - the higher the frames per second the smoother the final result. You can save as an AVI or GIF. I tend to save my animation as an AVI and convert it to a GIF in Photoshop, and I describe how you do this in a later section of this post.

Output Options
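When choosing a frame rate it helps to know how long one pass through your frames will last. This is a minimal sketch with a function name of my own, using the 55 and 109 frame counts from earlier in this post.

```python
def playback_seconds(frame_count, frames_per_second):
    """Length of one pass through the animation, in seconds."""
    return frame_count / frames_per_second


for frames in (55, 109):
    for fps in (10, 15):
        seconds = playback_seconds(frames, fps)
        print(f"{frames} frames at {fps} fps plays for {seconds:.1f} s")
```

If a single pass comes out very short, that is a sign you either need more frames or a lower frame rate.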

Do Processing - Once you are happy with the settings you have chosen go to the Do Processing tab and click [Start Processing]. The AVI or GIF will then be generated and saved to the location you specified in the Output Options tab. If you get a processing error where the object has not been detected, just go back and change your object detection threshold and try again. Your planetary animation should now be ready to enjoy and share!

Image processing in PIPP and saved AVI

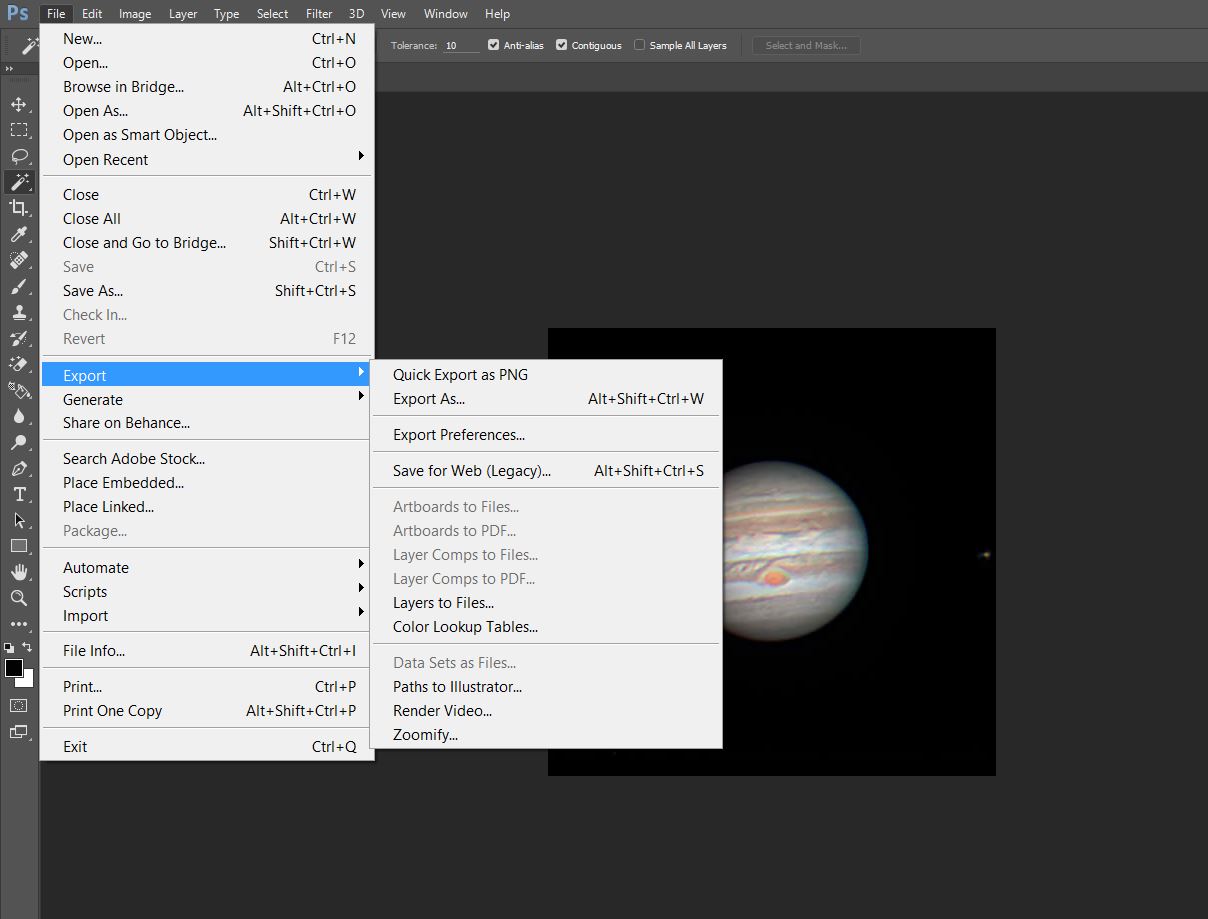

Converting Your Video to a .gif in Photoshop

While you can save your output as a .GIF in PIPP I tend to do this as an additional step in Photoshop. It's not essential but I find it gives a slightly better result. If you don't have Photoshop or are happy with the output from PIPP then you don't need to perform this step. If you do want to try it, here's what you need to do.

Open Photoshop (obviously)

Go to [File] [Import] [Video Frames to Layers..]

Import video file as layers in PhotoShop

When the options box below appears ensure 'Make Frame Animation' is checked. You can also choose whether you want to limit the frames processed, though generally for planetary animations you would want all frames.

Choose the number of frames you want to include and Ensure Make frame animation is checked

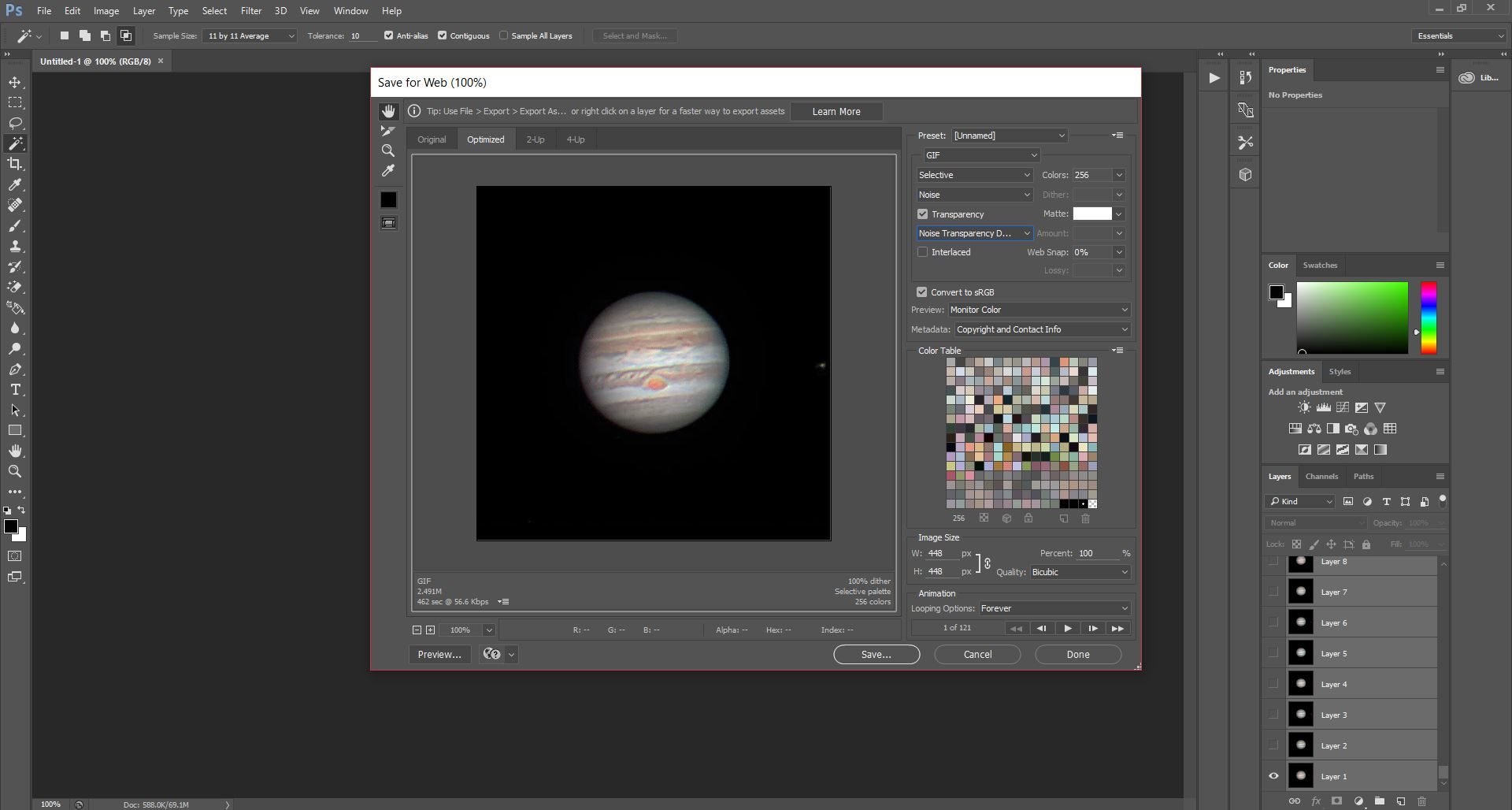

Select [File] [Export] [Save for Web (Legacy)…] (this may just be [File] [Save for Web] in older versions)

Go to File, Export, Save for Web

You can choose various options from the menus within the GIF screen presented, generally I just set the noise settings as shown.

Choose your options from the gif screen

Now save and share!

Jupiter

A Guide to Planetary Imaging & Processing

In these days of smartphones and sophisticated DSLRs it has never been easier to capture images of the Moon or planets by placing your phone camera at the eyepiece or attaching a DSLR to the back of your scope and shooting video or taking a photo.

The results can be pretty impressive, but what if you want to capture the planets in more detail? This is a much more involved process which I’ve summarised in the chart below. Hopefully you won’t find this too intimidating, but even if you do, read on and I’ll attempt to guide you through the steps needed to produce your own detailed images of the planets.

High Resolution Planetary Imaging work-flow

Image Acquisition

I’ve covered the importance of seeing, focus and image scale in an earlier post - High Resolution imaging of the Moon (http://www.thelondonastronomer.com/it-is-rocket-science/2018/4/12/high-resolution-imaging-of-the-moon), so won’t restate the criticality of these factors here. This section covers what I believe are the key points to consider regarding camera settings and capture duration, assuming that you are imaging at the longer focal lengths required for high resolution planetary photography.

Capture Duration

Just as the Earth rotates on its axis, so do the other planets in our Solar System, some faster and some much slower. This rotation can have a significant effect on the sharpness of your images as features will smear when stacked due to their movement over the capture period. The longer the duration of your capture video, the worse it will be.
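To put a rough number on the smearing, here is a sketch that estimates how far a feature at the centre of the disc drifts during a capture. The 9.9 hour period is from my planetary imaging figures; the 45 arcsecond apparent diameter for Jupiter is an assumed, typical near-opposition value, so treat the result as a ballpark only.

```python
import math


def drift_arcsec(diameter_arcsec, period_hours, capture_minutes):
    """Approximate drift of a feature at the centre of the disc.

    A point on the equator travels one circumference (pi * diameter)
    per rotation, so the drift scales with the fraction of a rotation
    completed during the capture.
    """
    circumference = math.pi * diameter_arcsec
    return circumference * capture_minutes / (period_hours * 60)


# ASSUMPTION: Jupiter about 45 arcseconds across, typical near opposition
JUPITER_DIAMETER_ARCSEC = 45.0
JUPITER_PERIOD_HOURS = 9.9

for minutes in (1, 2, 5):
    drift = drift_arcsec(JUPITER_DIAMETER_ARCSEC, JUPITER_PERIOD_HOURS, minutes)
    print(f"{minutes} min capture: about {drift:.2f} arcsec of drift")
```

Comparing the drift with the image scale of your own setup (arcseconds per pixel) gives a feel for when rotation starts to blur fine detail.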

This blurring can be mitigated by using software such as WinJupos and AutoStakkert (which I will cover in detail later), but I try to keep my ‘sub-captures’ within the limits detailed below, and the overall capture period (remember we are taking multiple sets of data) in the case of Jupiter to around 30 minutes to reduce blurring around the limbs of the planet.